iobjectspy package

Subpackages

Submodules

iobjectspy.analyst module

The analyst module provides commonly used spatial data processing and analysis functions. Users can use the analyst module to perform buffer analysis (create_buffer()), overlay analysis (overlay()), Thiessen polygon creation (create_thiessen_polygons()), topological region building (topology_build_regions()), density analysis (kernel_density()), interpolation analysis (interpolate()), raster algebra operations (expression_math_analyst()) and other functions.

In all interfaces of the analyst module, input data parameters that require a dataset (Dataset, DatasetVector, DatasetImage, DatasetGrid) accept a dataset object directly, a combination of datasource alias and dataset name (for example, 'alias/dataset_name' or 'alias\dataset_name'), or a combination of datasource connection information and dataset name (for example, 'E:/data.udb/dataset_name').

- Supports passing a dataset object

>>> ds = Datasource.open('E:/data.udb')
>>> create_buffer(ds['point'], 10, 10, unit='Meter', out_data='E:/buffer_out.udb')

- Supports passing a combination of datasource alias and dataset name

>>> create_buffer(ds.alias + '/point', 10, 10, unit='Meter', out_data='E:/buffer_out.udb')
>>> create_buffer(ds.alias + '\\point', 10, 10, unit='Meter', out_data='E:/buffer_out.udb')
>>> create_buffer(ds.alias + '|point', 10, 10, unit='Meter', out_data='E:/buffer_out.udb')

- Supports passing a combination of UDB file path and dataset name

>>> create_buffer('E:/data.udb/point', 10, 10, unit='Meter', out_data='E:/buffer_out.udb')

- Supports passing a combination of datasource connection information and dataset name. Datasource connection information includes DCF files, XML strings, and so on. For details, see DatasourceConnectionInfo.make().

>>> create_buffer('E:/data_ds.dcf/point', 10, 10, unit='Meter', out_data='E:/buffer_out.udb')

In all interfaces of the analyst module, output data parameters that require a datasource (Datasource) accept a Datasource object or a DatasourceConnectionInfo object. They also accept the alias of a datasource in the current workspace, a UDB file path, a DCF file path, and so on.

- Supports passing a UDB file path

>>> create_buffer('E:/data.udb/point', 10, 10, unit='Meter', out_data='E:/buffer_out.udb')

- Supports passing a datasource object

>>> ds = Datasource.open('E:/buffer_out.udb')
>>> create_buffer('E:/data.udb/point', 10, 10, unit='Meter', out_data=ds)
>>> ds.close()

- Supports passing a datasource alias

>>> ds_conn = DatasourceConnectionInfo('E:/buffer_out.udb', alias='my_datasource')
>>> create_buffer('E:/data.udb/point', 10, 10, unit='Meter', out_data='my_datasource')


iobjectspy.analyst.create_buffer(input_data, distance_left, distance_right=None, unit=None, end_type=None, segment=24, is_save_attributes=True, is_union_result=False, out_data=None, out_dataset_name='BufferResult', progress=None)

Create a buffer of vector datasets or record sets.

Buffer analysis is the process of generating one or more regions around space objects, using one or more distance values from these objects (called buffer radius) as the radius. Buffer can also be understood as an influence or service range of spatial objects.

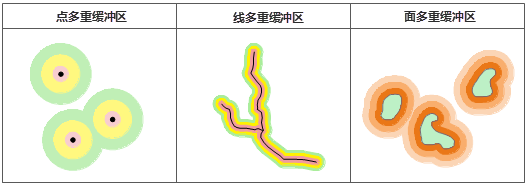

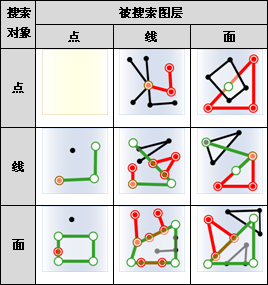

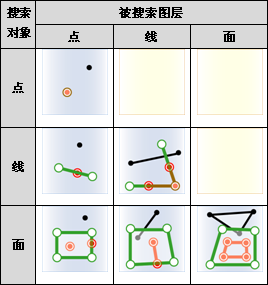

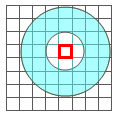

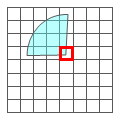

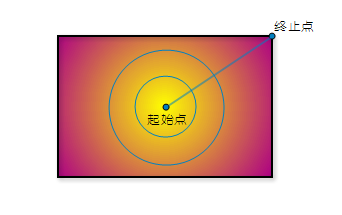

The basic objects of buffer analysis are points, lines and areas. SuperMap supports buffer analysis for two-dimensional point, line, and area datasets (or record sets) and for network datasets. When buffer analysis is performed on a network dataset, the edge segments are buffered. A buffer can be a single (simple) buffer or a multiple buffer. The following takes the simple buffer as an example to introduce point, line and area buffers in turn.

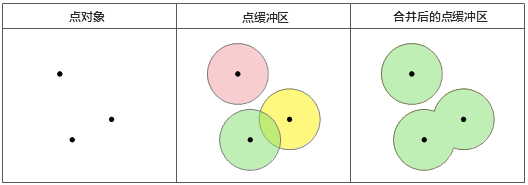

Point buffer

The point buffer is a circular area generated with the point object as the center and the given buffer distance as the radius. When the buffer distance is large enough, the buffers of two or more point objects may overlap. When merging is selected, the overlapping parts are merged and the resulting buffer is a complex area object.

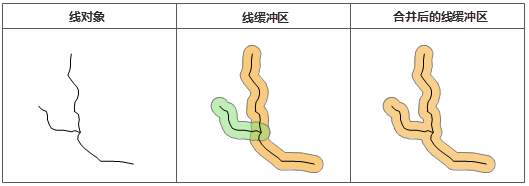

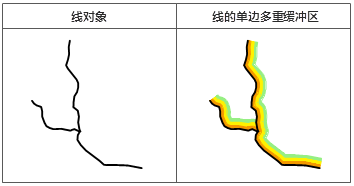

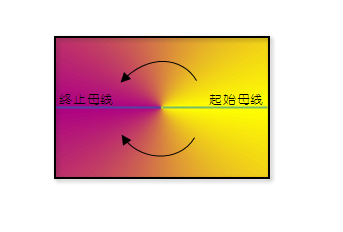

Line buffer

The line buffer is a closed area formed by offsetting the line object by a certain distance to both sides along its normal direction and closing the ends of the line with a smooth curve (round end) or a straight edge (flat end). Similarly, when the buffer distance is large enough, the buffers of two or more line objects may overlap. Merging has the same effect as for point buffers.

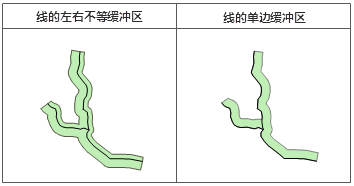

The buffer widths on the two sides of a line object can differ, which produces a buffer with unequal left and right widths. You can also create a single-sided buffer on only one side of the line object. In both cases only flat-ended buffers can be generated.

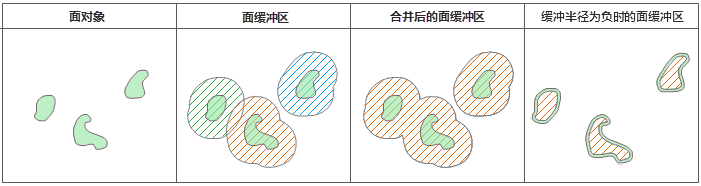

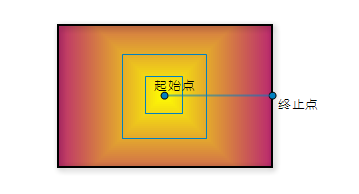

Area buffer

The area buffer is generated in a similar way to the line buffer. The difference is that the area buffer only expands or contracts on one side of the area boundary. When the buffer radius is positive, the buffer expands outward from the boundary of the area object; when it is negative, it shrinks inward. Similarly, when the buffer distance is large enough, the buffers of two or more area objects may overlap. You can also choose to merge the buffers, with the same effect as for point buffers.

Multiple buffers are created by generating, around each geometric object, one buffer for each of the given buffer radii. For line objects you can also create single-sided multiple buffers, but note that multiple buffers are not supported for network datasets.

Buffer analysis is often used in GIS spatial analysis, and is often combined with overlay analysis to jointly solve practical problems. Buffer analysis has applications in many fields such as agriculture, urban planning, ecological protection, flood prevention and disaster relief, military, geology, and environment.

For example, when widening a road, you can create a buffer for the road according to the planned widening width, overlay the buffer layer on the building layer, and use overlay analysis to find the buildings that fall inside the buffer and need to be demolished. As another example, to protect the environment and arable land, buffer analysis can be performed on wetland, forest, grassland and arable land, with industrial construction prohibited inside the buffer.

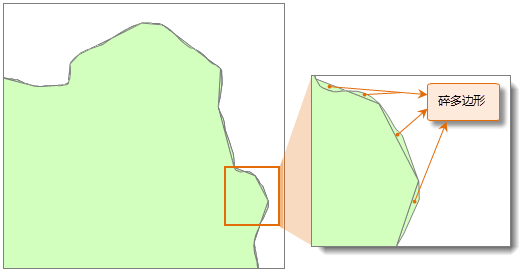

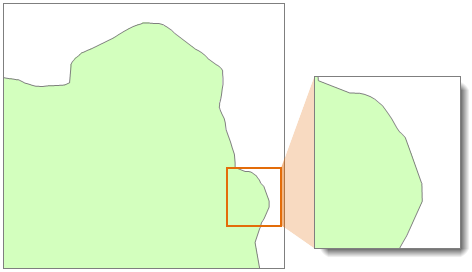

Description:

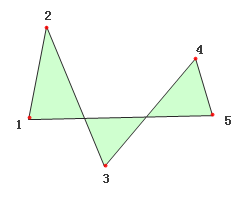

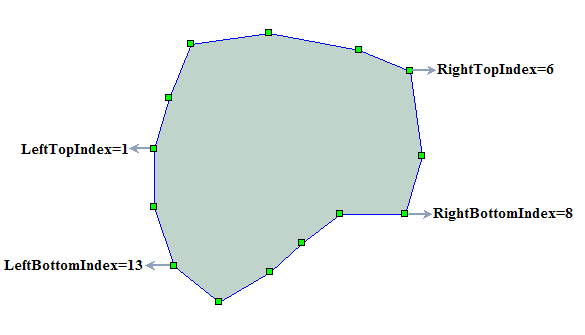

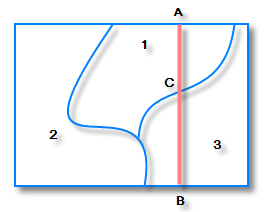

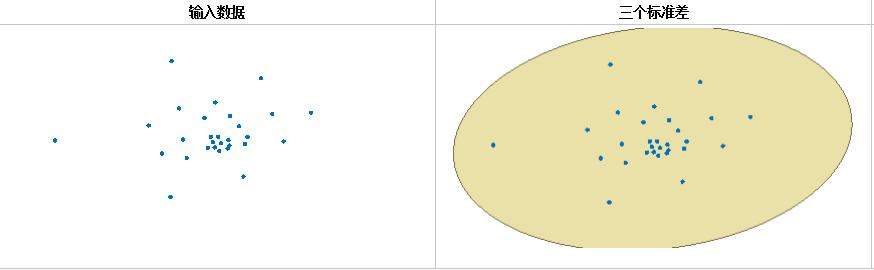

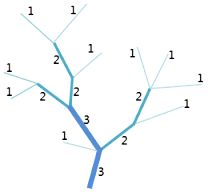

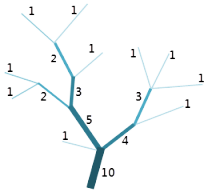

- For area objects, it is best to run a topology check before buffer analysis to rule out self-intersections. A self-intersection means that the boundary of an area object intersects itself, as shown in the figure, where the numbers indicate the node order of the area object.

Explanation of “negative radius”

- If the buffer radius is numeric, only area data supports a negative radius.

- If the buffer radius is a field or field expression and its value is negative, the absolute value is used for point and line data. For area data, the absolute value is used if the buffers are merged; if they are not merged, the value is treated as a negative radius.
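The two rules above can be sketched as a small decision function. This is only an illustration, not part of iobjectspy: the function name, the geometry labels 'point', 'line' and 'region', and the choice to raise an error for a negative numeric radius on point or line data are all assumptions made for the example.

```python
def effective_buffer_radius(geometry_type, value, from_field, is_union_result):
    """Return the radius that would be applied under the rules listed above.

    geometry_type: 'point', 'line' or 'region' (hypothetical labels).
    value: the numeric radius, or the value read from the field or expression.
    from_field: True when the radius comes from a field or field expression.
    is_union_result: True when the buffers are merged.
    """
    if value >= 0:
        return value
    if not from_field:
        # Numeric radius: only area (region) data supports a negative value.
        # Raising here is an assumption; the text does not say what happens.
        if geometry_type != 'region':
            raise ValueError('a negative numeric radius is only supported for area data')
        return value
    # Radius read from a field or field expression.
    if geometry_type in ('point', 'line'):
        return abs(value)
    # Area data: absolute value when merged, negative radius otherwise.
    return abs(value) if is_union_result else value
```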

Parameters:

- input_data (Recordset or DatasetVector or str) – The source vector dataset or record set for which the buffer is created. Point, line and area datasets and record sets are supported.

- distance_left (float or str) – The (left) buffer distance. If it is a string, it names the field that stores the (left) buffer distance, that is, each geometric object uses the value stored in that field as its buffer radius when the buffer is created. For line objects it is the radius of the left buffer; for point and area objects it is the buffer radius.

- distance_right (float or str) – The right buffer distance. If it is a string, it names the field that stores the right buffer distance, that is, each line geometry object uses the value stored in that field as its right buffer radius. This parameter is only valid for line objects.

- unit (Unit or str) – The unit of the buffer radius. Only distance units are supported; angle and radian units are not.

- end_type (BufferEndType or str) – The end type of the buffer, which determines whether the ends of a line buffer are round or flat. For point and area objects, only round buffers are supported.

- segment (int) – The number of edge segments per semicircle, that is, how many line segments are used to approximate a semicircle. Must be greater than or equal to 4.

- is_save_attributes (bool) – Whether to preserve the field attributes of the buffered objects. This parameter has no effect when the result buffers are merged; that is, it only takes effect when is_union_result is False.

- is_union_result (bool) – Whether to merge the buffers, that is, whether to merge the buffer areas generated for all objects of the source data before returning them. For area objects, the area objects in the source dataset must be disjoint.

- out_data (Datasource) – The datasource in which to store the result data.

- out_dataset_name (str) – The name of the result dataset.

- progress (function) – A function for handling progress information; see StepEvent.

Returns: result dataset or dataset name

Return type: DatasetVector or str
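To make the segment parameter concrete, the sketch below approximates a point buffer with straight edges, using segment edges per semicircle. It is a plain-Python illustration, not the library's implementation. The function names are hypothetical, and placing the vertices exactly on the circle is an assumption.

```python
import math


def point_buffer_ring(x, y, radius, segment=24):
    """Approximate a circular point buffer with 2 * segment straight edges."""
    if segment < 4:
        raise ValueError('segment must be greater than or equal to 4')
    count = 2 * segment  # `segment` edges per semicircle, so twice that per circle
    ring = []
    for i in range(count):
        angle = 2.0 * math.pi * i / count
        ring.append((x + radius * math.cos(angle), y + radius * math.sin(angle)))
    return ring


def ring_area(ring):
    """Shoelace formula for the area of a simple polygon."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(ring, ring[1:] + ring[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


ring = point_buffer_ring(0.0, 0.0, 10.0, segment=24)
print(len(ring), ring_area(ring), math.pi * 10.0 ** 2)
```

With the vertices on the circle, the polygon area is always slightly smaller than the true circle area, and the gap narrows as segment grows.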


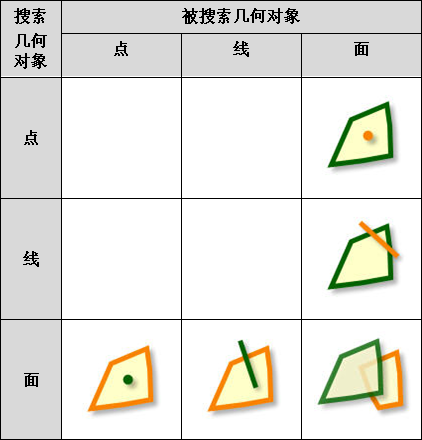

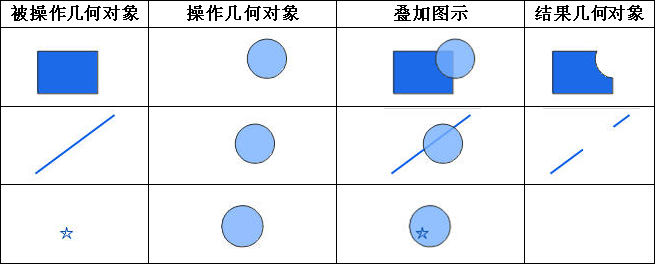

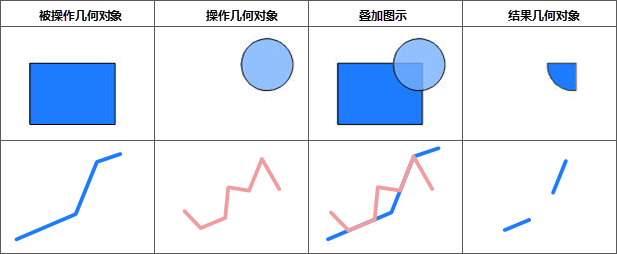

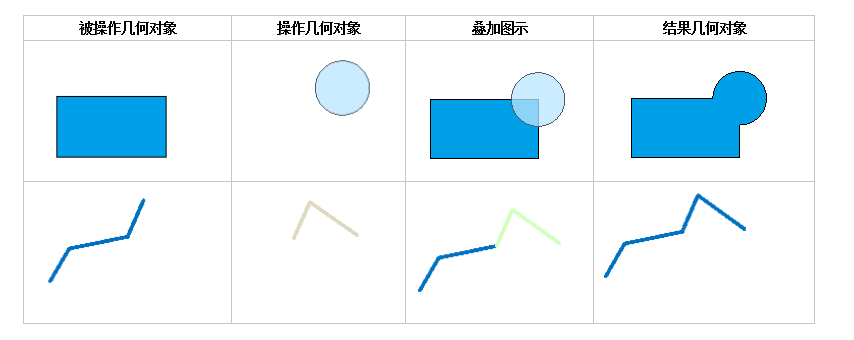

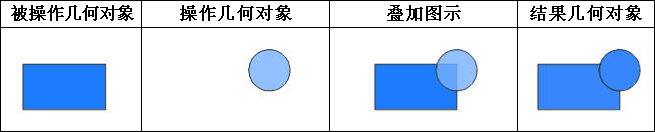

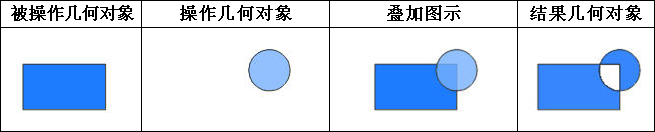

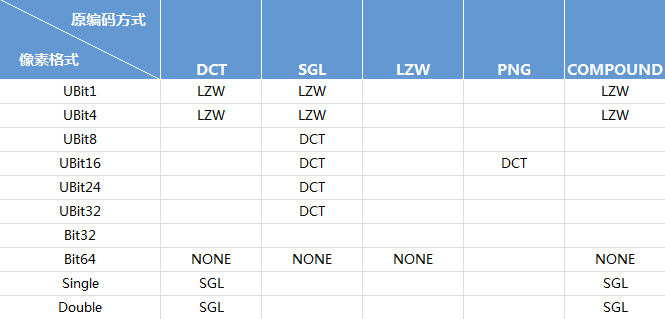

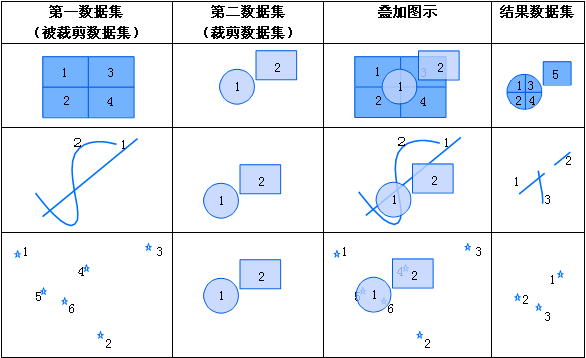

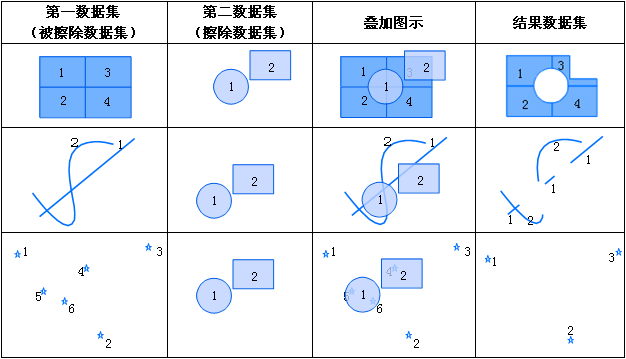

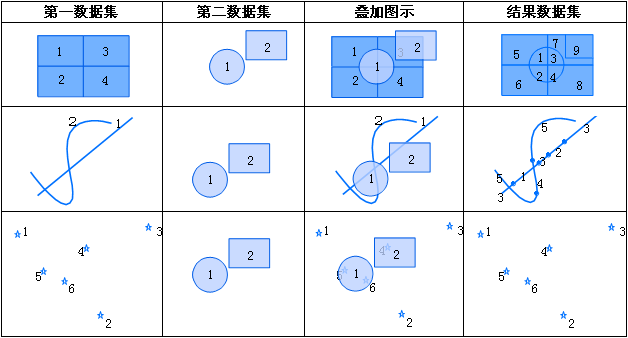

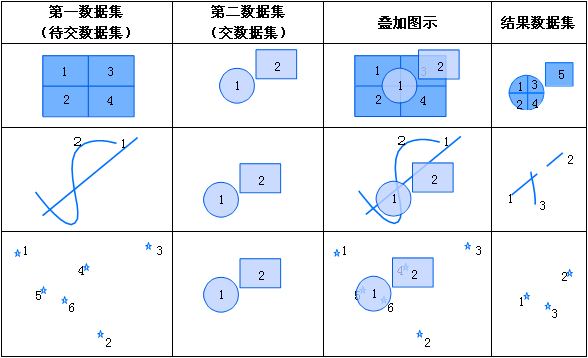

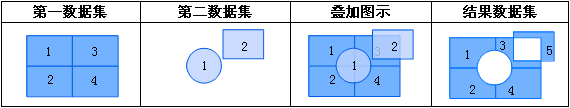

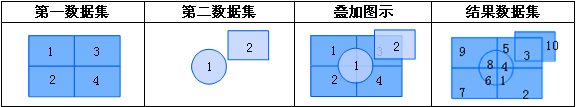

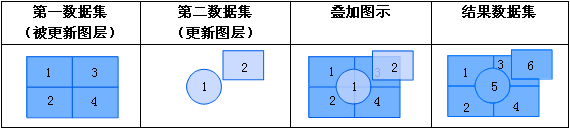

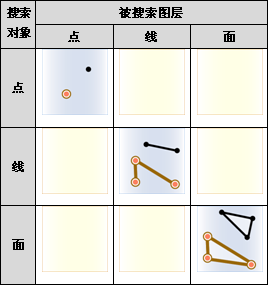

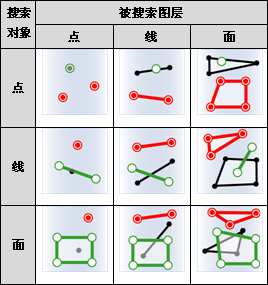

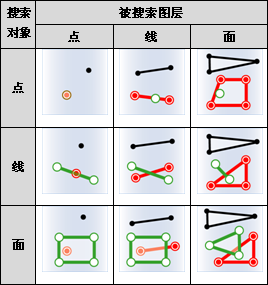

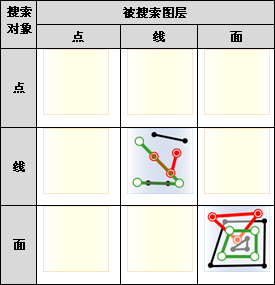

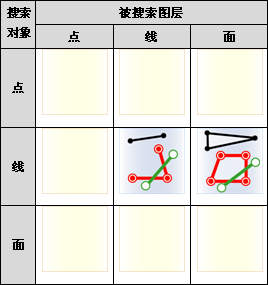

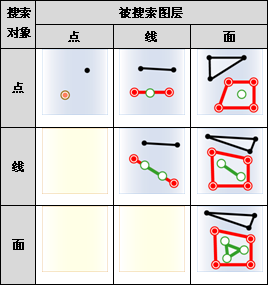

iobjectspy.analyst.overlay(source_input, overlay_input, overlay_mode, source_retained=None, overlay_retained=None, tolerance=1e-10, out_data=None, out_dataset_name='OverlayOutput', progress=None, output_type=OverlayAnalystOutputType.INPUT, is_support_overlap_in_layer=False)

Overlay analysis performs overlay operations between two input datasets or record sets, such as clip, erase, union, intersect, identity, xor and update. Overlay analysis is a very important spatial analysis function in GIS. It refers to the process of generating a new dataset through a series of set operations on two datasets under a unified spatial reference system. Overlay analysis is widely used in resource management, urban construction assessment, land management, agriculture, forestry and animal husbandry, statistics and other fields. Overlay analysis makes it possible to process and analyze spatial data, extract the new spatial geometric information the user needs, and process the attribute information of the data.

- Of the two datasets used in overlay analysis, one is called the input dataset (the first dataset in SuperMap GIS), whose type can be point, line, area, and so on; the other is called the overlay dataset (the second dataset in SuperMap GIS), which is generally of polygon type.

- The polygon dataset or record set itself should avoid containing overlapping areas; otherwise the overlay analysis results may be wrong.

- The data for overlay analysis, including input data and result data, must share the same geographic reference.

- When the amount of data is large, create a spatial index on the result dataset to improve display speed.

- The results of overlay analysis do not take the system fields of the datasets into account.

Note:

- When source_input is a dataset, overlay_input can be a dataset, a record set, or a list of area geometry objects.

- When source_input is a record set, overlay_input can be a dataset, a record set, or a list of area geometry objects.

- When source_input is a list of geometry objects, overlay_input can be a dataset, a record set, or a list of area geometry objects.

- When source_input is a list of geometry objects, valid result datasource information must be set.

Parameters:

- source_input (DatasetVector or Recordset or list[Geometry]) – The source data of the overlay analysis, which can be a dataset, a record set, or a list of geometry objects. When the overlay mode is update, xor or union, the source data must be area data. When the overlay mode is clip, intersect, erase or identity, the source data can be point, line or area data.

- overlay_input (DatasetVector or Recordset or list[Geometry]) – The overlay data involved in the calculation. It must be area data and can be a dataset, a record set, or a list of geometry objects.

- overlay_mode (OverlayMode or str) – The overlay analysis mode.

- source_retained (list[str] or str) – The fields to retain from the source dataset or record set. When source_retained is a str, multiple fields can be separated with ',', for example "field1,field2,field3".

- overlay_retained (list[str] or str) – The fields to retain from the overlay data. When overlay_retained is a str, multiple fields can be separated with ',', for example "field1,field2,field3". Ignored for CLIP and ERASE.

- tolerance (float) – The tolerance value of the overlay analysis.

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource in which the result data is saved. If it is empty, the result dataset is saved to the datasource that contains the source dataset.

- out_dataset_name (str) – The name of the result dataset.

- progress (function) – A function for handling progress information; see StepEvent.

- output_type (str or OverlayAnalystOutputType) – The type of the result dataset. For the intersection of areas, you can choose to return a point dataset.

- is_support_overlap_in_layer (bool) – Whether objects within an area dataset are allowed to overlap. The default is False, meaning overlaps are not supported; if the area dataset contains overlaps, the overlay analysis result may be wrong. If set to True, a different algorithm is used that supports overlaps within the area dataset.

Returns: result dataset or dataset name

Return type: DatasetVector or str
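The constraints on source_input and the two accepted forms of the retained-field parameters can be summarized in a short sketch. Both helper functions and the geometry labels are hypothetical and exist only for this illustration; they mirror the parameter descriptions above and are not part of iobjectspy.

```python
AREA_ONLY_MODES = {'UPDATE', 'XOR', 'UNION'}
ANY_TYPE_MODES = {'CLIP', 'INTERSECT', 'ERASE', 'IDENTITY'}


def source_type_allowed(overlay_mode, source_type):
    """Check whether a source geometry type is accepted for an overlay mode.

    source_type is one of 'point', 'line', 'region' (hypothetical labels).
    """
    mode = overlay_mode.upper()
    if mode in AREA_ONLY_MODES:
        return source_type == 'region'
    if mode in ANY_TYPE_MODES:
        return source_type in ('point', 'line', 'region')
    raise ValueError('unknown overlay mode: %s' % overlay_mode)


def normalize_retained_fields(fields):
    """Accept a list of field names or a comma-separated string."""
    if fields is None:
        return []
    if isinstance(fields, str):
        return [name.strip() for name in fields.split(',') if name.strip()]
    return list(fields)
```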


iobjectspy.analyst.multilayer_overlay(inputs, overlay_mode, output_attribute_type='ONLYATTRIBUTES', tolerance=1e-10, out_data=None, out_dataset_name='OverlayOutput', progress=None)

Multi-layer overlay analysis. Supports overlay analysis of multiple datasets or multiple record sets.

>>> ds = open_datasource('E:/data.udb')
>>> input_dts = [ds['dltb_2017'], ds['dltb_2018'], ds['dltb_2019']]
>>> result_dt = multilayer_overlay(input_dts, 'intersect', 'OnlyID', 1.0e-7)
>>> assert result_dt is not None

Parameters:

- inputs (list[DatasetVector] or list[Recordset] or list[list[Geometry]]) – The datasets or record sets participating in the overlay analysis.

- overlay_mode (OverlayMode or str) – The overlay analysis mode. Only intersection (OverlayMode.INTERSECT) and union (OverlayMode.UNION) are supported.

- output_attribute_type (OverlayOutputAttributeType or str) – The type of field attributes returned by the multi-layer overlay analysis.

- tolerance (float) – The node tolerance.

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource in which the result data is saved. If it is empty, the result dataset is saved to the datasource that contains the source dataset. When all inputs are lists of geometry objects, the datasource for the result data must be set.

- out_dataset_name (str) – The name of the result dataset.

- progress (function) – A function for handling progress information; see StepEvent.

Returns:

Return type:


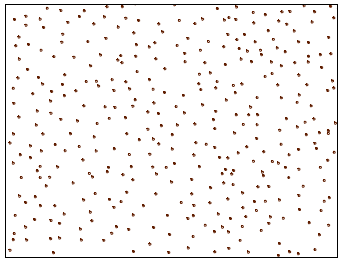

iobjectspy.analyst.create_random_points(dataset_or_geo, random_number, min_distance=None, clip_bounds=None, out_data=None, out_dataset_name=None, progress=None)

Randomly generate points within a geometric object. When generating random points, you can specify the number of random points and the minimum distance between them. When both are specified, the minimum distance takes priority: the distance between any two generated points must be greater than the minimum distance, so the number of generated points may be less than the requested number.

>>> ds = open_datasource('E:/data.udb')
>>> dt = ds['dltb']
>>> polygon = dt.get_geometries('SmID == 1')[0]
>>> points = create_random_points(polygon, 10)
>>> print(len(points))
10
>>> points = create_random_points(polygon, 10, 1500)
>>> print(len(points))
9
>>> assert compute_distance(points[0], points[1]) > 1500
>>> random_dataset = create_random_points(dt, 10, 1500, None, ds, 'random_points', None)
>>> print(random_dataset.type)
'Point'

Parameters:

- dataset_or_geo (GeoRegion or GeoLine or Rectangle or DatasetVector or str) – The geometric object or dataset in which to create random points. When a single geometric object is given, line and area geometric objects are supported. When a dataset is given, point, line and area datasets are supported.

- random_number (str or float) – The number of random points, or the name of the field that stores the number of random points. A field name can only be used when generating random points in a dataset.

- min_distance (str or float) – The minimum distance between random points, or the name of the field that stores it. When the value is None or 0, no distance limit is applied between points. When it is greater than 0, the distance between any two random points must be greater than the specified distance, and the number of points generated may not equal the requested number. A field name can only be used when generating random points in a dataset.

- clip_bounds (Rectangle) – The extent in which to generate random points. Can be None, in which case random points are generated over the entire dataset or geometric object.

- out_data (Datasource) – The datasource in which to store the result data.

- out_dataset_name (str) – The name of the result dataset.

- progress (function) – A function for handling progress information; see StepEvent.

Returns: The list of random points or the dataset that contains them. When random points are generated in a geometric object, list[Point2D] is returned. When random points are generated in a dataset, the dataset is returned.

Return type: list[Point2D] or DatasetVector or str
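The priority of the minimum distance over the requested count can be illustrated with simple rejection sampling inside a rectangle. This is a sketch of the general idea only. How iobjectspy generates points internally is not described here, and the function name, the max_attempts cutoff and the seed parameter are assumptions for the example.

```python
import math
import random


def random_points_in_rect(left, bottom, right, top, count,
                          min_distance=None, max_attempts=2000, seed=0):
    """Draw up to `count` random points, rejecting candidates that are too close."""
    rng = random.Random(seed)
    points = []
    attempts = 0
    while len(points) < count and attempts < max_attempts:
        attempts += 1
        candidate = (rng.uniform(left, right), rng.uniform(bottom, top))
        # None or 0 disables the distance check, as in the parameter description.
        if min_distance and any(math.dist(candidate, p) <= min_distance
                                for p in points):
            continue
        points.append(candidate)
    return points


free = random_points_in_rect(0, 0, 100, 100, 10)
crowded = random_points_in_rect(0, 0, 100, 100, 50, min_distance=40)
print(len(free), len(crowded))
```

In the second call the rectangle cannot hold 50 points that are all more than 40 units apart, so fewer points are returned than requested.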


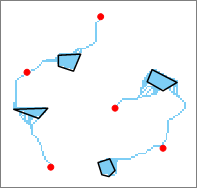

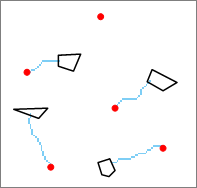

iobjectspy.analyst.regularize_building_footprint(dataset_or_geo, offset_distance, offset_distance_unit=None, regularize_method=RegularizeMethod.ANYANGLE, min_area=0.0, min_hole_area=0.0, prj=None, is_attribute_retained=False, out_data=None, out_dataset_name=None, progress=None)

Regularize area-type building objects to generate regularized objects that cover the original area objects.

>>> ds = open_datasource('E:/data.udb')
>>> dt = ds['building']
>>> polygon = dt.get_geometries('SmID == 1')[0]

Regularize a geometric object with an offset distance of 0.1, in the unit of the dataset coordinate system:

>>> regularize_result = regularize_building_footprint(polygon, 0.1, None, RegularizeMethod.ANYANGLE,
...                                                   prj=dt.prj_coordsys)
>>> assert regularize_result.type == GeometryType.GEOREGION

Regularize a dataset:

>>> regularize_dt = regularize_building_footprint(dt, 0.1, 'meter', RegularizeMethod.RIGHTANGLES, min_area=10.0,
...                                               min_hole_area=2.0, is_attribute_retained=False)
>>> assert regularize_dt.type == DatasetType.REGION

Parameters:

- dataset_or_geo (GeoRegion or Rectangle or DatasetVector or str) – The building area object or area dataset to be processed.

- offset_distance (float) – The maximum distance by which a regularized object can be offset from the boundary of its original object. Together with offset_distance_unit, the value can be given either in the linear unit of the data coordinate system or in an explicit distance unit.

- offset_distance_unit (Unit or str) – The unit of the regularization offset distance. The default is None, meaning the unit of the data is used.

- regularize_method (RegularizeMethod or str) – The regularization method.

- min_area (float) – The minimum area of a regularized object. Objects smaller than this area are deleted. Takes effect when the value is greater than 0. When the specified coordinate system or the coordinate system of the dataset is projected or geographic (latitude and longitude), the area unit is square meters. When the coordinate system is None or a planar coordinate system, the area unit corresponds to the data unit.

- min_hole_area (float) – The minimum area of a hole in a regularized object. Holes smaller than this area are deleted. Takes effect when the value is greater than 0. When the specified coordinate system or the coordinate system of the dataset is projected or geographic (latitude and longitude), the area unit is square meters. When the coordinate system is None or a planar coordinate system, the area unit corresponds to the data unit.

- prj (PrjCoordSys or str) – The coordinate system of the building area object. Only valid when the input is a geometric object.

- is_attribute_retained (bool) – Whether to keep the attribute field values of the original objects. Only valid when the input is a dataset.

- out_data (Datasource) – The datasource in which to store the result data.

- out_dataset_name (str) – The name of the result dataset.

- progress (function) – A function for handling progress information; see StepEvent.

Returns: The regularized area object or area dataset. If the input is an area object, the result is also an area object. If the input is an area dataset, the result is also an area dataset, and a status field is added to it. A status value of 0 means that regularization failed and the stored object is the original object. A status value of 1 means that regularization succeeded and the stored object is the regularized object.

Return type: DatasetVector or GeoRegion


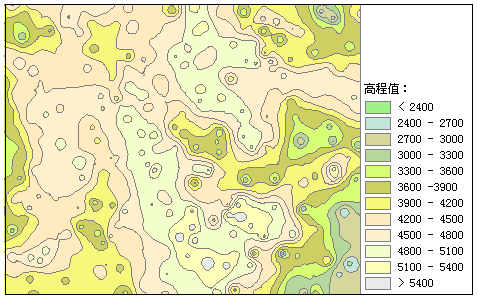

iobjectspy.analyst.tabulate_area(class_dataset, zone_dataset, class_field=None, zone_field=None, resolution=None, out_data=None, out_dataset_name=None, progress=None)

Tabulate area: compute the area of each category within each zone and output an attribute table, so that users can view the area summary of each category in each zone. In the resulting attribute table:

- Each unique value in the zone dataset has one record.

- Each unique value of the category dataset has one field.

- Each record stores the area of each category within that zone.

![TabulateArea](../image/TabulateArea.png)

Parameters:

- class_dataset (DatasetGrid or DatasetVector or str) – The category dataset whose areas are tabulated. Raster, point, line and area datasets are supported. A raster dataset is recommended. If a point or line dataset is used, the area that intersects the feature will be output.

- zone_dataset (DatasetGrid or DatasetVector or str) – The zone dataset. Raster, point, line and area datasets are supported. A zone is defined as all areas in the input that have the same value; the areas do not need to be connected. A raster dataset is recommended. If a vector dataset is used, it is converted internally using "vector to raster".

- class_field (str) – The category field for the area statistics. When class_dataset is a DatasetVector, a valid category field must be specified.

- zone_field (str) – The zone value field. When zone_dataset is a DatasetVector, a valid zone value field must be specified.

- resolution (float) –

- out_data (Datasource) – The datasource in which to store the result data.

- out_dataset_name (str) – The name of the result dataset.

- progress (function) – A function for handling progress information; see StepEvent.

Returns: Return type:
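The structure of the result table can be illustrated with a minimal cross-tabulation over two aligned rasters held as nested lists. This sketch shows the idea only: the function name is hypothetical, None stands in for a no-data cell, and treating the cell area as resolution squared is an assumption.

```python
from collections import defaultdict


def tabulate_area_grid(zone_grid, class_grid, resolution):
    """Sum the cell area of each class inside each zone.

    Returns {zone_value: {class_value: area}}: one record per zone value,
    one entry per class value, as in the attribute table described above.
    """
    cell_area = resolution * resolution
    table = defaultdict(lambda: defaultdict(float))
    for zone_row, class_row in zip(zone_grid, class_grid):
        for zone, cls in zip(zone_row, class_row):
            if zone is None or cls is None:  # skip no-data cells
                continue
            table[zone][cls] += cell_area
    return {zone: dict(classes) for zone, classes in table.items()}


zones = [[1, 1, 2],
         [1, 2, 2]]
classes = [['a', 'b', 'b'],
           ['a', 'a', 'b']]
result = tabulate_area_grid(zones, classes, 10.0)
print(result)
```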


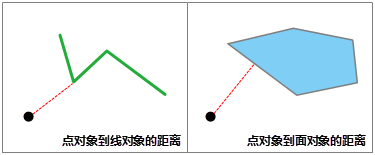

iobjectspy.analyst.auto_compute_project_points(point_input, line_input, max_distance, out_data=None, out_dataset_name=None, progress=None)

Automatically compute the foot of the perpendicular from each point to a line.

>>> ds = open_datasource('E:/data.udb')
>>> auto_compute_project_points(ds['point'], ds['line'], 10.0)

Parameters:

- point_input (DatasetVector or Recordset) – The input point dataset or record set.

- line_input (DatasetVector or Recordset) – The input line dataset or record set.

- max_distance (float) – The maximum search distance, in the unit of the dataset coordinate system. A value less than 0 means the search distance is not limited.

- out_data (Datasource) – The datasource in which to store the result data.

- out_dataset_name (str) – The name of the result dataset.

- progress (function) – A function for handling progress information; see StepEvent.

Returns: The feet of the perpendiculars from the points to the lines.

Return type: DatasetVector or str
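The geometry behind this function can be sketched in plain Python: project the point onto each segment of a polyline, keep the nearest foot, and discard it when it lies beyond max_distance. This is not the library's implementation. The function names are hypothetical, and clamping the foot to the segment endpoints is an assumption of the sketch.

```python
import math


def foot_on_segment(point, start, end):
    """Foot of the perpendicular from point onto segment start-end.

    The foot is clamped to the segment, so it never falls past an endpoint.
    """
    px, py = point
    ax, ay = start
    bx, by = end
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return start  # degenerate segment: both endpoints coincide
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return (ax + t * dx, ay + t * dy)


def nearest_foot(point, polyline, max_distance):
    """Nearest foot on a polyline, or None when it is beyond max_distance.

    A negative max_distance means the search distance is not limited.
    """
    best, best_dist = None, None
    for start, end in zip(polyline, polyline[1:]):
        foot = foot_on_segment(point, start, end)
        dist = math.dist(point, foot)
        if best_dist is None or dist < best_dist:
            best, best_dist = foot, dist
    if best is None:
        return None
    if max_distance >= 0 and best_dist > max_distance:
        return None
    return best
```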


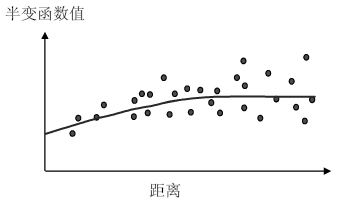

iobjectspy.analyst.compute_natural_breaks(input_dataset_or_values, number_zones, value_field=None)

Calculate natural break points. Jenks Natural Breaks is a statistical method for grading and classifying values according to their statistical distribution. It maximizes the difference between classes, that is, it makes the variance within each group as small as possible and the variance between groups as large as possible. The features are divided into multiple levels or categories, and the boundaries of these levels or categories are set where the data values differ relatively strongly.

Parameters:

- input_dataset_or_values (DatasetGrid or DatasetVector or list[float]) – The dataset or list of floating-point numbers to be analyzed. Raster datasets and vector datasets are supported.

- number_zones (int) – The number of groups.

- value_field (str) – The name of the field used for natural break classification. When the input is a vector dataset, a valid field name must be set.

Returns: A list of natural break points. Each break point is the maximum value of its group.

Return type: list[float]
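The idea of minimizing within-group variance can be sketched with a small dynamic program over the sorted values (the Fisher-Jenks approach). This is an illustration of the technique, not the algorithm iobjectspy uses internally; the function name is hypothetical, and the cubic running time makes it suitable only for short lists.

```python
def natural_breaks(values, number_zones):
    """Return the maximum value of each group for an optimal contiguous split."""
    data = sorted(values)
    n = len(data)
    if not 1 <= number_zones <= n:
        raise ValueError('number_zones must be between 1 and len(values)')

    # Prefix sums make the sum of squared deviations of any slice O(1).
    prefix, prefix_sq = [0.0], [0.0]
    for v in data:
        prefix.append(prefix[-1] + v)
        prefix_sq.append(prefix_sq[-1] + v * v)

    def ssd(i, j):
        """Sum of squared deviations from the mean for data[i:j]."""
        total = prefix[j] - prefix[i]
        return (prefix_sq[j] - prefix_sq[i]) - total * total / (j - i)

    inf = float('inf')
    # cost[k][j]: minimal total within-group SSD for data[:j] split into k groups.
    cost = [[inf] * (n + 1) for _ in range(number_zones + 1)]
    split = [[0] * (n + 1) for _ in range(number_zones + 1)]
    cost[0][0] = 0.0
    for k in range(1, number_zones + 1):
        for j in range(k, n + 1):
            for i in range(k - 1, j):
                candidate = cost[k - 1][i] + ssd(i, j)
                if candidate < cost[k][j]:
                    cost[k][j] = candidate
                    split[k][j] = i  # the last group is data[i:j]

    # Walk the split table backwards to collect each group's maximum.
    breaks = []
    j = n
    for k in range(number_zones, 0, -1):
        breaks.append(data[j - 1])
        j = split[k][j]
    return breaks[::-1]
```

For example, splitting [1, 2, 3, 10, 11, 12, 50, 51, 52] into three groups puts the boundaries in the two large gaps, so the group maxima are 3, 12 and 52.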


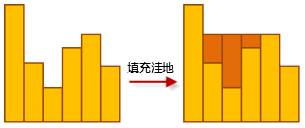

iobjectspy.analyst.erase_and_replace_raster(input_data, replace_region, replace_value, out_data=None, out_dataset_name=None, progress=None)

Erase and fill a raster or image dataset, that is, modify the raster values within a specified region.

Process image data:

>>> region = Rectangle(875.5, 861.2, 1172.6, 520.9)
>>> result = erase_and_replace_raster(data_dir + 'example_data.udbx/seaport', region, (43, 43, 43))

Process raster data:

>>> region = Rectangle(107.352104894652, 30.1447395778174, 107.979276445055, 29.6558796240814)
>>> result = erase_and_replace_raster(data_dir + 'example_data.udbx/DEM', region, 100)

Parameters:

- input_data (DatasetImage or DatasetGrid or str) – The raster or image dataset to be erased.

- replace_region (Rectangle or GeoRegion) – The region to erase.

- replace_value (float or int or tuple[int,int,int]) – The replacement value for the erased region. The cell values inside the erased region are replaced with replace_value.

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource in which the result dataset is stored.

- out_dataset_name (str) – The name of the result dataset.

- progress (function) – A function for handling progress information; see StepEvent.

Returns: The result dataset or the name of the result dataset.

Return type: DatasetGrid or DatasetImage or str
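Conceptually, the operation overwrites every cell that falls inside the region. The sketch below shows this on a raster held as nested lists, with the region already converted to half-open row and column ranges. The function name and that conversion are assumptions of the example; how the library maps replace_region to cells is not described here.

```python
def replace_cells(grid, row_range, col_range, replace_value):
    """Return a copy of grid with the cells in the given ranges replaced.

    row_range and col_range are half-open (start, stop) index pairs and are
    clipped to the grid, so a region larger than the raster is harmless.
    """
    row_start, row_stop = row_range
    col_start, col_stop = col_range
    result = [list(row) for row in grid]  # leave the input untouched
    for r in range(max(row_start, 0), min(row_stop, len(result))):
        for c in range(max(col_start, 0), min(col_stop, len(result[r]))):
            result[r][c] = replace_value
    return result


dem = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
patched = replace_cells(dem, (0, 2), (1, 3), 100)
print(patched)
```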


iobjectspy.analyst.dissolve(input_data, dissolve_type, dissolve_fields, field_stats=None, attr_filter=None, is_null_value_able=True, is_preprocess=True, tolerance=1e-10, out_data=None, out_dataset_name='DissolveResult', progress=None)

Dissolve combines objects that have the same dissolve field values into a simple object or a complex object. It applies to line objects and area objects. Sub-objects are the basic objects that make up simple and complex objects: a simple object consists of a single sub-object, that is, the simple object itself, while a complex object is composed of two or more sub-objects of the same type.

Parameters:

- input_data (DatasetVector or str) – The vector dataset to be dissolved. Must be a line dataset or an area dataset.

- dissolve_type (DissolveType or str) – The dissolve type.

- dissolve_fields (list[str] or str) – The dissolve fields. Only records with the same values in the dissolve fields are dissolved together. When dissolve_fields is a str, multiple fields can be separated with ',', for example "field1,field2,field3".

- field_stats (list[tuple[str,StatisticsType]] or list[tuple[str,str]] or str) – The names of the statistical fields and the corresponding statistics types. When field_stats is a list, each element is a tuple whose first element is the field to compute statistics on and whose second element is the statistics type. When field_stats is a str, multiple fields can be separated with ',', for example "field1:SUM, field2:MAX, field3:MIN".

- attr_filter (str) – The filter expression applied to objects when the dataset is dissolved.

- tolerance (float) – The dissolve tolerance.

- is_null_value_able (bool) – Whether to process objects whose dissolve field value is null.

- is_preprocess (bool) – Whether to perform topology preprocessing.

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource in which the result data is saved. If it is empty, the result dataset is saved to the datasource that contains the input dataset.

- out_dataset_name (str) – The name of the result dataset.

- progress (function) – A function for handling progress information; see StepEvent.

Returns: result dataset or dataset name

Return type: DatasetVector or str

>>> result = dissolve('E:/data.udb/zones','SINGLE','SmUserID','Area:SUM', tolerance=0.000001, out_data='E:/dissolve_out.udb')
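The attribute side of a dissolve, grouping records by the dissolve fields and computing statistics per group, can be sketched on plain dictionaries. Geometry merging is deliberately left out. The function names, the supported statistics and the output field naming (for example Area_SUM) are assumptions made for this illustration.

```python
from collections import defaultdict

STATISTICS = {'SUM': sum, 'MAX': max, 'MIN': min}


def parse_field_stats(field_stats):
    """Turn 'field1:SUM, field2:MAX' into [('field1', 'SUM'), ('field2', 'MAX')]."""
    if isinstance(field_stats, str):
        pairs = []
        for item in field_stats.split(','):
            name, stat = item.split(':')
            pairs.append((name.strip(), stat.strip().upper()))
        return pairs
    return [(name, str(stat).upper()) for name, stat in field_stats]


def dissolve_records(records, dissolve_fields, field_stats):
    """Group records by the dissolve fields and compute statistics per group."""
    groups = defaultdict(list)
    for record in records:
        key = tuple(record[field] for field in dissolve_fields)
        groups[key].append(record)
    stats = parse_field_stats(field_stats)
    result = []
    for key, members in groups.items():
        row = dict(zip(dissolve_fields, key))
        for name, stat in stats:
            row[name + '_' + stat] = STATISTICS[stat]([m[name] for m in members])
        result.append(row)
    return result


records = [{'zone': 'A', 'Area': 1.5},
           {'zone': 'B', 'Area': 2.0},
           {'zone': 'A', 'Area': 2.5}]
merged = dissolve_records(records, ['zone'], 'Area:SUM')
print(merged)
```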


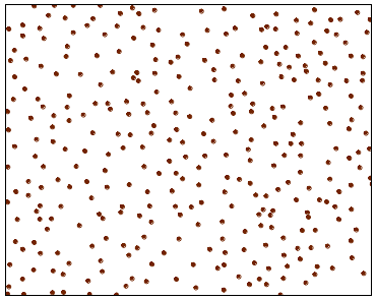

iobjectspy.analyst.aggregate_points(input_data, min_pile_point, distance, unit=None, class_field=None, out_data=None, out_dataset_name='AggregateResult', progress=None)¶ Cluster the point dataset and use the density clustering algorithm to return the clustered category or the polygon formed by the same cluster. To cluster the spatial position of the point set, use the density clustering method DBSCAN, which can divide the regions with sufficiently high density into clusters, and can find clusters of arbitrary shapes in the spatial data with noise. It defines a cluster as the largest collection of points that are connected in density. DBSCAN uses threshold e and MinPts to control the generation of clusters. Among them, the area within the radius e of a given object is called the e-neighborhood of the object. If the e-neighborhood of an object contains at least the minimum number of MinPtS objects, the object is called the core object. Given a set of objects D, if P is in the e-neighborhood of Q, and Q is a core object, we say that the object P is directly density reachable from the object Q. DBSCAN looks for clusters by checking the e-domain of each point in the data. If the e-domain of a point P contains more than MinPts points, a new cluster with P as the core object is created, and then DBSCAN repeatedly Look for objects whose density is directly reachable from these core objects and join the cluster until no new points can be added.

Parameters: - input_data (DatasetVector or str) – input point dataset

- min_pile_point (int) – The threshold for the number of density clustering points, which must be greater than or equal to 2. The larger the threshold, the stricter the condition for points to form a cluster.

- distance (float) – The radius of density clustering.

- unit (Unit or str) – The unit of the density cluster radius.

- class_field (str) – The field in the input point dataset used to store the result of density clustering. If it is not empty, it must be a valid field name in the point dataset. The field type is required to be INT16, INT32 or INT64. If the field name is valid but does not exist, an INT32 field will be created. If the parameter is valid, the cluster category will be saved in this field.

- out_data (Datasource or DatasourceConnectionInfo or str) – result datasource information. out_data and class_field cannot both be empty. If the result datasource is valid, the result region objects will be generated.

- out_dataset_name (str) – result dataset name

- progress (function) – progress information processing function, please refer to StepEvent

Returns: The result dataset or the name of the dataset. If the input result datasource is empty, a boolean value will be returned. True means clustering is successful, False means clustering fails.

Return type: DatasetVector or str or bool

>>> result = aggregate_points('E:/data.udb/point', 4, 100,'Meter','SmUserID', out_data='E:/aggregate_out.udb')
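The DBSCAN procedure described above can be sketched in plain Python. This is a minimal textbook illustration and not the implementation used by aggregate_points: the function name dbscan, the brute-force neighbor search, and the convention that a point counts as a member of its own e-neighborhood are all assumptions made for the example.

```python
import math

def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise.

    Hypothetical helper for illustration; eps plays the role of the
    radius e and min_pts the role of MinPts in the description above.
    """
    def neighbors(i):
        # Indices of all points within eps of points[i], including i itself.
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE              # not a core object (may become a border point later)
            continue
        cluster += 1                       # i is a core object: start a new cluster
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster        # border point reached from a core object
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is also a core object: keep expanding
    return labels

# Two dense squares of four points each and one isolated point.
sample = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (50, 50)]
labels = dbscan(sample, eps=1.5, min_pts=4)
print(labels)
```

In this sketch the cluster id plays the role of the value written to class_field, and the label -1 marks points that belong to no cluster.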

-

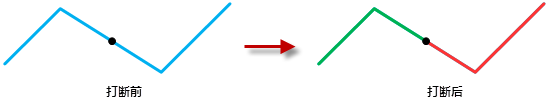

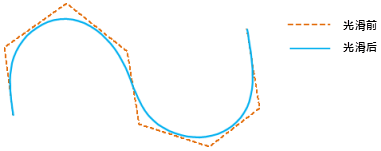

iobjectspy.analyst.smooth_vector(input_data, smoothness, out_data=None, out_dataset_name=None, progress=None, is_save_topology=False)¶ Smooth a vector dataset. Line, region and network datasets are supported.

Purpose of smoothing

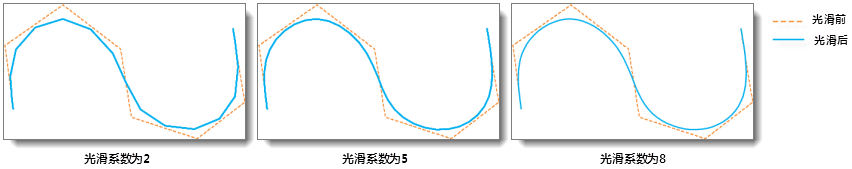

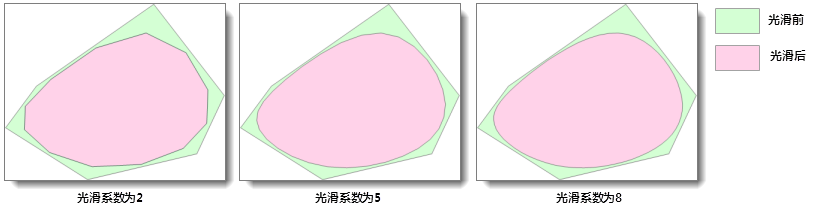

When the boundary of a polyline or polygon has too many line segments, it may distort the description of the original feature, make the data unsuitable for further processing or analysis, or give poor display and printing results, so the data needs to be simplified. Simplification methods generally include resampling (resample_vector()) and smoothing. Smoothing replaces the original polyline with a curve or with straight line segments by adding nodes. Note that after a polyline is smoothed, its length usually becomes shorter and the direction of its line segments changes noticeably, but the relative position of its two endpoints does not change; the area of a region object usually becomes smaller after smoothing.

Setting of smoothing method and smoothing coefficient

This method uses the B-spline method to smooth the vector dataset. For an introduction to the B-spline method, please refer to the SmoothMethod class. The smoothing coefficient (the smoothness parameter of this method) controls the degree of smoothing: the larger the coefficient, the smoother the result data. The recommended range of the smoothing coefficient is [2,10]. This method supports smoothing of line, region and network datasets.

- Set the smoothing effect of different smoothing coefficients on the line dataset:

- Set the smoothing effect of different smoothing coefficients on the face dataset:

Parameters: - input_data (DatasetVector or str) – dataset that needs to be smoothed, support line dataset, surface dataset and network dataset

- smoothness (int) – The specified smoothness coefficient. A value greater than or equal to 2 is valid. The larger the value, the more the number of nodes on the boundary of the line object or area object, and the smoother it will be. The recommended value range is [2,10].

- out_data (Datasource or DatasourceConnectionInfo or str) – The result datasource. If this parameter is empty, the original data is smoothed directly, that is, the original data is changed. If this parameter is not empty, the original data is first copied to this datasource and the copied dataset is then smoothed. The datasource pointed to by out_data can be the same as the datasource where the source dataset is located.

- out_dataset_name (str) – The name of the result dataset. It is valid only when out_data is not empty.

- progress (function) – progress information processing function, please refer to StepEvent

- is_save_topology (bool) – whether to save the object topology

Returns: result dataset or dataset name

Return type: DatasetVector or str

-

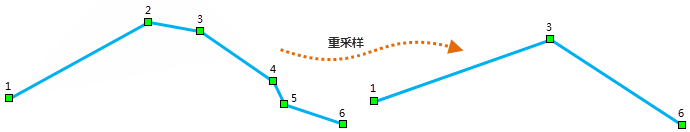

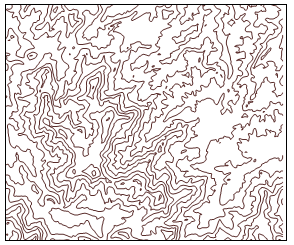

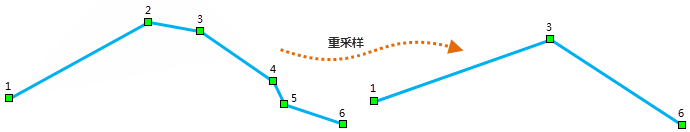

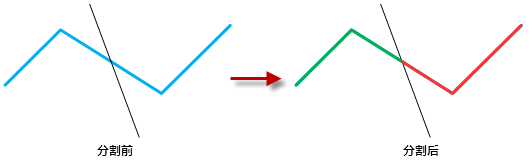

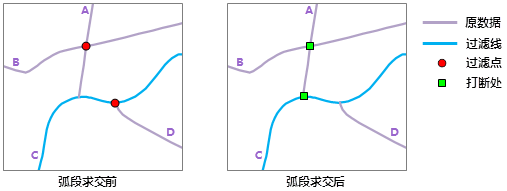

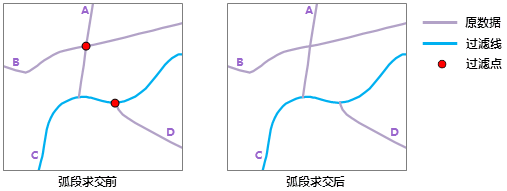

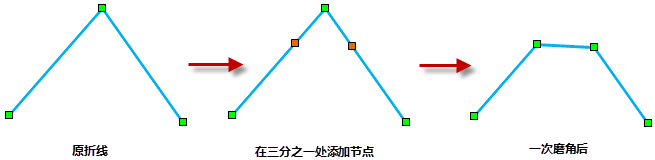

iobjectspy.analyst.resample_vector(input_data, distance, resample_type=VectorResampleType.RTBEND, is_preprocess=True, tolerance=1e-10, is_save_small_geometry=False, out_data=None, out_dataset_name=None, progress=None, is_save_topology=False)¶ Resample a vector dataset. Line, region and network datasets are supported. Vector data resampling removes some nodes according to certain rules in order to simplify the data (as shown in the figure below). The result may differ depending on the resampling method used. SuperMap provides two resampling methods; please refer to VectorResampleType

This method can resample line, region and network datasets. Resampling a region dataset essentially resamples the boundaries of the region objects. For a boundary shared by several region objects, if topological preprocessing is performed, the shared boundary is resampled only once, for one of the polygons, and the shared boundaries of the other polygons are adjusted to fit that result, so no gaps appear.

Note: When the resampling tolerance is too large, the correctness of the data may be affected, such as the intersection of two polygons at the common boundary.

Parameters: - input_data (DatasetVector or str) – The vector dataset that needs to be resampled, support line dataset, surface dataset and network dataset

- distance (float) – The resampling distance (tolerance), in the same unit as the dataset coordinate system. It can be any floating-point value greater than 0, but if the value is smaller than the default value, the default value is used. The larger the resampling distance, the more the result data is simplified

- resample_type (VectorResampleType or str) – Resampling method. Resampling supports the light barrier (bend) algorithm and the Douglas-Peucker algorithm. For details, refer to VectorResampleType. The light barrier algorithm (RTBEND) is used by default.

- is_preprocess (bool) – Whether to perform topology preprocess. It is only valid for face datasets. If the dataset is not topologically preprocessed, it may cause gaps, unless it can be ensured that the node coordinates of the common line of two adjacent faces in the data are exactly the same.

- tolerance (float) – Node capture tolerance when performing topology preprocessing, the unit is the same as the dataset unit.

- is_save_small_geometry (bool) – Whether to keep small objects. A small object is an object with an area of 0; such objects may be generated during resampling. True means small objects are kept, False means they are discarded.

- out_data (Datasource or DatasourceConnectionInfo or str) – The result datasource. If this parameter is empty, the original data is resampled directly, that is, the original data is changed. If this parameter is not empty, the original data is first copied to this datasource and the copied dataset is then resampled. The datasource pointed to by out_data can be the same as the datasource where the source dataset is located.

- out_dataset_name (str) – The name of the result dataset. It is valid only when out_data is not empty.

- progress (function) – progress information processing function, please refer to StepEvent

- is_save_topology (bool) – whether to save the object topology

Returns: result dataset or dataset name

Return type: DatasetVector or str
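To make the idea of removing nodes under a distance tolerance concrete, the following is a minimal sketch of the textbook Douglas-Peucker algorithm in plain Python. It is an illustration only: the function names are hypothetical, and the sketch makes no claim about how resample_vector implements either of its resampling methods.

```python
import math

def _point_segment_distance(p, a, b):
    """Distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def douglas_peucker(line, distance):
    """Simplify a polyline, dropping nodes closer than distance to the chord."""
    if len(line) < 3:
        return list(line)
    # Find the interior node farthest from the chord joining the endpoints.
    index, farthest = 0, 0.0
    for i in range(1, len(line) - 1):
        d = _point_segment_distance(line[i], line[0], line[-1])
        if d > farthest:
            index, farthest = i, d
    if farthest <= distance:
        return [line[0], line[-1]]        # every interior node is within tolerance
    # Otherwise keep the farthest node and simplify both halves recursively.
    left = douglas_peucker(line[:index + 1], distance)
    right = douglas_peucker(line[index:], distance)
    return left[:-1] + right

polyline = [(0, 0), (1, 0.05), (2, 0), (3, 2), (4, 0)]
simplified = douglas_peucker(polyline, 0.1)
print(simplified)
```

The two endpoints are always kept, and a larger distance removes more nodes, which matches the behavior described for the distance parameter.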

-

iobjectspy.analyst.create_thiessen_polygons(input_data, clip_region, field_stats=None, out_data=None, out_dataset_name=None, progress=None)¶ Create Thiessen polygons. The American meteorologist A. H. Thiessen proposed a method for calculating average rainfall from the rainfall recorded at discretely distributed weather stations: connect all adjacent weather stations into triangles and draw the perpendicular bisector of each side of these triangles. The perpendicular bisectors around each weather station then enclose a polygon. The rainfall intensity at the single weather station contained in this polygon is used to represent the rainfall intensity over the whole polygon, and the polygon is called a Thiessen polygon.

Characteristics of Thiessen polygons:

- Each Thiessen polygon contains only one discrete point.

- Any location inside a Thiessen polygon is closer to that polygon's discrete point than to any other discrete point.

- A location on an edge of a Thiessen polygon is equidistant from the discrete points on the two sides of that edge.

- Thiessen polygons can be used for qualitative analysis, statistical analysis, proximity analysis, etc. For example, the properties of a discrete point can be used to describe the properties of its Thiessen polygon area, and the data of a discrete point can be used to calculate the data of its Thiessen polygon area.

- The discrete points adjacent to a given discrete point can be read directly from the Thiessen polygon: if the polygon has n sides, the point is adjacent to n discrete points. When a data point falls inside a Thiessen polygon, it is closest to the corresponding discrete point, and there is no need to calculate the distance.

Proximity analysis is one of the most basic analysis functions in the GIS field. It is used to discover proximity relationships between things. The proximity analysis provided here builds Thiessen polygons from the supplied point data in order to obtain the neighboring relationships between the points. A Thiessen polygon assigns the area surrounding a point in the point set to that point, so that any location in the area owned by the point (that is, in the Thiessen polygon associated with the point) is closer to this point than to any other point. The Thiessen polygons that are built also satisfy all the characteristics listed above.

How are Thiessen polygons created? Use the following diagram to understand the process of creating a Thiessen polygon:

- Scan the input point data from left to right and from top to bottom. If the distance between a point and a previously scanned point is less than the given proximity tolerance, the analysis ignores this point.

- Build an irregular triangulation, that is, a Delaunay triangulation, from all points that pass the scan.

- Draw the perpendicular bisector of each triangle side. These perpendicular bisectors form the sides of the Thiessen polygons, and their intersections are the vertices of the corresponding Thiessen polygons.

- Each point used to create a Thiessen polygon becomes the anchor point of that polygon.

Parameters: - input_data (DatasetVector or Recordset or list[Point2D]) – The input point data, which can be a point dataset, a point record set or a list of Point2D

- clip_region (GeoRegion) – The region used to clip the result data. This parameter can be empty; if it is empty, the result dataset is not clipped

- field_stats (list[str,StatisticsType] or list[str,str] or str) – The names of the statistical fields and the corresponding statistic types. The input is a list in which each element is a tuple of size 2: the first element is the name of the field to be counted and the second element is the statistic type. When field_stats is a str, multiple fields are separated with ',', for example "field1:SUM, field2:MAX, field3:MIN"

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource where the result area object is located. If out_data is empty, the generated Thiessen polygon surface geometry object will be directly returned

- out_dataset_name (str) – The name of the result dataset. It is valid only when out_data is not empty.

- progress (function) – progress information processing function, please refer to StepEvent

Returns: If out_data is empty, list[GeoRegion] will be returned, otherwise the result dataset or dataset name will be returned.

Return type: DatasetVector or str or list[GeoRegion]
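Two of the ideas above, the proximity-tolerance scan and the rule that every location belongs to the polygon of its nearest point, can be sketched without any geometry library. The helper names below are hypothetical, the exact scan order is an assumption (x ascending, then y descending), and the sketch does not build the polygons themselves.

```python
import math

def filter_close_points(points, tolerance):
    """Scan left to right, then top to bottom, and drop any point that lies
    closer than tolerance to a point that has already been kept."""
    kept = []
    for p in sorted(points, key=lambda q: (q[0], -q[1])):
        if all(math.dist(p, k) >= tolerance for k in kept):
            kept.append(p)
    return kept

def owning_site(location, sites):
    """Index of the site whose Thiessen polygon contains location.

    By the defining property of Thiessen polygons this is simply the
    nearest site, so no polygon geometry is needed for the lookup.
    """
    return min(range(len(sites)), key=lambda i: math.dist(location, sites[i]))

raw_points = [(0, 0), (0.001, 0), (4, 0), (0, 4)]
sites = filter_close_points(raw_points, tolerance=0.01)
print(sites)
print(owning_site((1, 1), sites), owning_site((3.5, 0.5), sites))
```

The point (0.001, 0) is dropped because it lies within the tolerance of (0, 0); the two lookups then return the indices of (0, 0) and (4, 0) in the filtered list.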


-

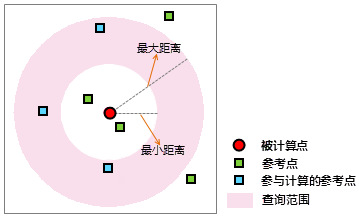

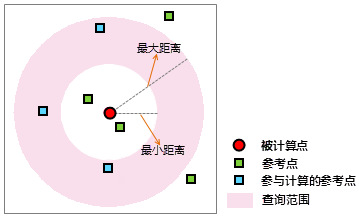

iobjectspy.analyst.summary_points(input_data, radius, unit=None, stats=None, is_random_save_point=False, is_save_attrs=False, out_data=None, out_dataset_name=None, progress=None)¶ Thin the point dataset according to the specified distance, that is, use one point to represent all points within the specified distance. This method supports different units, lets you choose how the representative point is selected, and can also compute statistics over the original points that each thinned point represents. In the result dataset (out_dataset_name), two new fields, SourceObjID and StatisticsObjNum, are created. The SourceObjID field stores the SmID, in the original dataset, of the point object kept after thinning. The StatisticsObjNum field stores the number of points represented by the current point, including the points that were thinned out and the point itself.

Parameters: - input_data (DatasetVector or str or Recordset) – point dataset to be thinned

- radius (float) – The thinning radius. Take any point as the center; all points whose coordinates lie within this radius of that point are represented by a single point. Make sure to choose the unit of the thinning radius appropriately.

- unit (Unit or str) – The unit of the radius of the thinning point.

- stats (list[StatisticsField] or str) – Statistics to compute over the original points represented by each thinned point. You need to set the name of the field to be counted, the name of the result field and the statistics mode. When the list is empty, no statistics are computed. When stats is a str, multiple StatisticsFields are separated with ';' and the parts of each StatisticsField are separated with ',' in the form 'source_field,stat_type,result_name', for example: 'field1,AVERAGE,field1_avg; field2,MINVALUE,field2_min'

- is_random_save_point (bool) – Whether to choose the kept point randomly. True means a point is selected at random from the points within the thinning radius. False means the point with the smallest sum of distances to all other points within the thinning radius is kept.

- is_save_attrs (bool) – whether to retain attribute fields

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource where the result dataset is located

- out_dataset_name (str) – result dataset name

- progress (function) – progress information processing function, please refer to StepEvent

Returns: result dataset or dataset name

Return type: DatasetVector or str
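The thinning behavior with is_random_save_point=False can be sketched as follows. This is an illustrative greedy grouping under stated assumptions: the function name thin_points is hypothetical, and the order in which the library picks group centers is not documented here, so the sketch simply visits points in input order.

```python
import math

def thin_points(points, radius):
    """Return (representative_index, count) pairs, one per group of nearby points.

    The pair loosely mirrors the SourceObjID and StatisticsObjNum fields
    described above, using list indices in place of SmID values.
    """
    remaining = set(range(len(points)))
    result = []
    for i in range(len(points)):
        if i not in remaining:
            continue
        # Group the seed point with every still-unassigned point within the radius.
        group = [j for j in sorted(remaining)
                 if math.dist(points[i], points[j]) <= radius]
        # Keep the member whose summed distance to the whole group is smallest.
        rep = min(group,
                  key=lambda j: sum(math.dist(points[j], points[k]) for k in group))
        result.append((rep, len(group)))
        remaining.difference_update(group)
    return result

thinned = thin_points([(0, 0), (1, 0), (2, 0), (10, 10)], radius=2.5)
print(thinned)
```

Here the three collinear points collapse onto the middle one, which has the smallest summed distance, and the isolated point represents only itself.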

-

iobjectspy.analyst.clip_vector(input_data, clip_region, is_clip_in_region=True, is_erase_source=False, out_data=None, out_dataset_name=None, progress=None)¶ The vector dataset is cropped and the result is stored as a new vector dataset.

Parameters: - input_data (DatasetVector or str) – The specified vector dataset to be cropped. It supports point, line, surface, text, and CAD dataset.

- clip_region (GeoRegion) – the specified clipping region

- is_clip_in_region (bool) – Whether to clip inside the clipping region. If True, the part of the dataset inside the clipping region is kept as the result; if False, the part of the dataset outside the clipping region is kept.

- is_erase_source (bool) – Specify whether to erase the crop area. If it is True, it means the crop area will be erased. If it is False, the crop area will not be erased.

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource where the result dataset is located

- out_dataset_name (str) – result dataset name

- progress (function) – progress information processing function, please refer to StepEvent

Returns: result dataset or dataset name

Return type: DatasetVector or str

-

iobjectspy.analyst.update_attributes(source_data, target_data, spatial_relation, update_fields, interval=1e-06)¶ Update the attributes of the vector dataset, and update the attributes in source_data to the target_data dataset according to the spatial relationship specified by spatial_relation. For example, if you have a piece of point data and surface data, you need to average the attribute values in the point dataset, and then write the value to the surface object that contains the points. This can be achieved by the following code:

>>> result = update_attributes('ds/points','ds/zones','WITHIN', [('trip_distance','mean'), ('','count')])

The spatial_relation parameter refers to the spatial relationship between the source dataset (source_data) and the target updated dataset (target_data).

Parameters: - source_data (DatasetVector or str) – The source dataset. The source dataset provides attribute data, and the attribute values in the source dataset are updated to the target dataset according to the spatial relationship.

- target_data (DatasetVector or str) – target dataset. The dataset to which the attribute data is written.

- spatial_relation (SpatialQueryMode or str) – Spatial relationship type: the spatial relationship between the source data (the query object) and the target data (the queried object). Please refer to SpatialQueryMode

- update_fields (list[tuple(str,AttributeStatisticsMode)] or list[tuple(str,str)] or str) – Field statistical information. Several source data objects may satisfy the spatial relationship with one target data object, so the attribute field values of the source data must be summarized and the statistical results written to the target dataset. The value is a list in which each element is a tuple of size 2: the first element is the name of the field to be counted and the second element is the statistical type.

- interval (float) – node tolerance

Returns: Whether the attribute update was successful. Return True if the update is successful, otherwise False.

Return type: bool
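The summarizing step, in which several source objects fall into one target object and their attribute values are reduced to a single statistic, can be sketched in plain Python. To keep the example self-contained, the zones are hypothetical axis-aligned rectangles rather than real region geometries, and the function name is an assumption; it only illustrates the mean and count statistics used in the example call above.

```python
from statistics import mean

def update_zone_attributes(points, zones):
    """Summarize point values per zone.

    points: list of (x, y, value) tuples (the source data).
    zones:  dict mapping a zone name to (xmin, ymin, xmax, ymax),
            a simplified stand-in for the target region objects.
    """
    updated = {}
    for name, (xmin, ymin, xmax, ymax) in zones.items():
        # Collect the values of all points that lie within this zone.
        values = [v for x, y, v in points
                  if xmin <= x <= xmax and ymin <= y <= ymax]
        updated[name] = {
            "mean": mean(values) if values else None,
            "count": len(values),
        }
    return updated

trips = [(1, 1, 10.0), (2, 2, 20.0), (8, 8, 5.0)]
areas = {"A": (0, 0, 5, 5), "B": (6, 6, 9, 9), "C": (20, 20, 30, 30)}
summary = update_zone_attributes(trips, areas)
print(summary)
```

A zone that contains no points gets a count of 0 and no mean, which is one reasonable convention; the behavior of update_attributes in that case is not stated in this section.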

-

iobjectspy.analyst.simplify_building(source_data, width_threshold, height_threshold, save_failed=False, out_data=None, out_dataset_name=None)¶ Right-angle polygon fitting of region objects. If the distance from a series of continuous nodes to the lower bound of the minimum-area bounding rectangle is greater than height_threshold, and the total width of the nodes is greater than width_threshold, the continuous nodes are fitted.

Parameters: - source_data (DatasetVector or str) – the face dataset to be processed

- width_threshold (float) – The threshold value from the point to the left and right boundary of the minimum area bounding rectangle

- height_threshold (float) – The threshold value from the point to the upper and lower boundary of the minimum area bounding rectangle

- save_failed (bool) – Whether to save the source area object when the area object fails to be orthogonalized. If it is False, the result dataset does not contain the failed area object.

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource used to store the result dataset.

- out_dataset_name (str) – result dataset name

Returns: result dataset or dataset name

Return type: DatasetVector or str

-

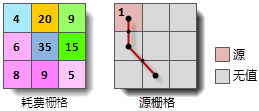

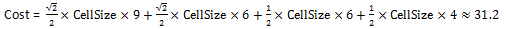

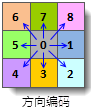

iobjectspy.analyst.resample_raster(input_data, new_cell_size, resample_mode, out_data=None, out_dataset_name=None, progress=None)¶ Raster data is resampled and the result dataset is returned.

After raster data has undergone geometric operations such as registration, correction or projection, the center positions of the raster cells usually change, and their positions in the input raster are no longer necessarily integer row and column numbers. The input raster therefore has to be resampled: for each cell of the output raster, its position in the input raster is determined, the cell value is interpolated according to certain rules, and a new raster matrix is built. When performing algebraic operations between raster datasets of different resolutions, the cell size also needs to be unified to a specified resolution, which again requires resampling.

There are three common methods for raster resampling: nearest neighbor method, bilinear interpolation method and cubic convolution method. For a more detailed introduction to these three resampling methods, please refer to the ResampleMode class.

Parameters: - input_data (DatasetImage or DatasetGrid or str) – The specified dataset used for raster resampling. Support image dataset, including multi-band images

- new_cell_size (float) – the cell size of the specified result grid

- resample_mode (ResampleMode or str) – Resampling calculation method

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource where the result dataset is located

- out_dataset_name (str) – result dataset name

- progress (function) – progress information processing function, please refer to StepEvent

Returns: result dataset or dataset name

Return type: DatasetImage or DatasetGrid or str
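Of the three resampling methods mentioned above, the nearest neighbor method is the simplest to illustrate. The sketch below works on a plain list-of-lists grid; the function name, the cell_size argument and the cell-center mapping convention are assumptions made for the example, not the behavior of resample_raster.

```python
def resample_nearest(grid, cell_size, new_cell_size):
    """Resample a 2D grid to a new cell size with the nearest neighbor rule."""
    rows, cols = len(grid), len(grid[0])
    new_rows = max(1, round(rows * cell_size / new_cell_size))
    new_cols = max(1, round(cols * cell_size / new_cell_size))
    out = []
    for r in range(new_rows):
        # Map the center of the output cell back into input row space.
        src_r = min(rows - 1, int((r + 0.5) * new_cell_size / cell_size))
        row = []
        for c in range(new_cols):
            # Same mapping for the column; the input cell containing the
            # output cell center supplies the value.
            src_c = min(cols - 1, int((c + 0.5) * new_cell_size / cell_size))
            row.append(grid[src_r][src_c])
        out.append(row)
    return out

source = [[1, 2],
          [3, 4]]
finer = resample_nearest(source, cell_size=10, new_cell_size=5)
for line in finer:
    print(line)
```

Halving the cell size turns each input cell into a 2 x 2 block of identical values. Bilinear interpolation and cubic convolution would instead blend neighboring input values.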

-

class

iobjectspy.analyst.ReclassSegment(start_value=None, end_value=None, new_value=None, segment_type=None)¶ Bases: object

Raster reclassification interval class. This class is mainly used for the related settings of reclassification interval information, including the start value and end value of the interval.

This class is used to set the parameters of each reclassification interval in the reclassification mapping table during reclassification. The attributes that need to be set are different for different reclassification types.

- When the reclassification type is single value reclassification, use the set_start_value() method to specify the single value of the source raster that needs to be re-assigned, and use the set_new_value() method to set the corresponding new value.

- When the reclassification type is range reclassification, use the set_start_value() method to specify the start value of the source raster value range that needs to be re-assigned, use the set_end_value() method to set the end value of the range, and use the set_new_value() method to set the new value corresponding to the interval. You can also use the set_segment_type() method to set whether the interval is "left open and right closed" or "left closed and right open".

Construct a raster reclassification interval object

Parameters: - start_value (float) – the starting value of the grid reclassification interval

- end_value (float) – the end value of the grid reclassification interval

- new_value (float) – the interval value of the grid reclassification or the new value corresponding to the old value

- segment_type (ReclassSegmentType or str) – Raster reclassification interval type

-

end_value¶ float – the end value of the raster reclassification interval

-

from_dict(values)¶ Read information from dict

Parameters: values (dict) – a dict containing ReclassSegment information Returns: self Return type: ReclassSegment

-

static

make_from_dict(values)¶ Read information from dict to construct ReclassSegment object

Parameters: values (dict) – a dict containing ReclassSegment information Returns: Raster reclassification interval object Return type: ReclassSegment

-

new_value¶ float – the interval value of the grid reclassification or the new value corresponding to the old value

-

segment_type¶ ReclassSegmentType – Raster reclassification interval type

-

set_end_value(value)¶ The end value of the grid reclassification interval

Parameters: value (float) – the end value of the grid reclassification interval Returns: self Return type: ReclassSegment

-

set_new_value(value)¶ The interval value of the grid reclassification or the new value corresponding to the old value

Parameters: value (float) – The interval value of the grid reclassification or the new value corresponding to the old value Returns: self Return type: ReclassSegment

-

set_segment_type(value)¶ Set the type of grid reclassification interval

Parameters: value (ReclassSegmentType or str) – Raster reclassification interval type Returns: self Return type: ReclassSegment

-

set_start_value(value)¶ Set the starting value of the grid reclassification interval

Parameters: value (float) – the starting value of the grid reclassification interval Returns: self Return type: ReclassSegment

-

start_value¶ float – the starting value of the grid reclassification interval

-

to_dict()¶ Output current object information to dict

Returns: dict object containing current object information Return type: dict

-

class

iobjectspy.analyst.ReclassMappingTable¶ Bases: object

Raster reclassification mapping table class. Provides single-value or range reclassification of the source raster dataset, and includes the processing of no-value data and unclassified cells.

The reclassification mapping table describes the correspondence between the source data values and the result data values. This correspondence is expressed by the following parts: the reclassification type, the set of reclassification intervals, and the handling of no-value and unclassified data.

- Types of reclassification

There are two types of reclassification: single value reclassification and range reclassification. Single value reclassification re-assigns certain single values. For example, a cell with a value of 100 in the source raster is assigned a value of 1 and output to the result raster. Range reclassification re-assigns all values in an interval to a single value. For example, cells in the source raster whose value is in the range [100,500) are re-assigned a value of 200 and output to the result raster. Set the reclassification type through the set_reclass_type() method of this class.

- Reclassification interval collection

The reclassification interval set specifies the correspondence between a single raster value, or the raster values in a certain interval, of the source raster and the new value after reclassification. It is set by the set_segments() method of this class. The set is composed of several ReclassSegment objects. Each object holds the information of one reclassification interval, including the start value and end value of the source raster single value or interval to be re-assigned, the type of the reclassification interval, and the new value corresponding to the interval or to the single value of the source raster. See the ReclassSegment class for details.

- Handling of no-value and unclassified data

For no-value cells in the source raster data, you can set whether they remain no-value through the set_retain_no_value() method of this class. If it is False, that is, no-value cells are not kept as no-value, you can use the set_change_no_value_to() method to specify a value for the no-value data.

For raster values that are not covered by the reclassification mapping table, you can use the set_retain_missing_value() method of this class to set whether they keep their original value. If it is False, that is, the original value is not kept, you can use the set_change_missing_value_to() method to specify a value for them.

In addition, this class also provides methods for exporting reclassification mapping table data as XML strings and XML files, and methods for importing XML strings or files. When multiple input raster data needs to apply the same classification range, they can be exported as a reclassification mapping table file. When the subsequent data is classified, the reclassification mapping table file is directly imported, and the imported raster data can be processed in batches. For the format and label meaning of the raster reclassification mapping table file, please refer to the to_xml method.
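The semantics of the mapping table described above (single value versus range intervals, plus the handling of no-value and unclassified cells) can be sketched in plain Python. This is a simplified model and not the library's implementation: the function name, the tuple layout of a segment, the "RANGE" and "OPENCLOSE" strings and the -9999 no-value marker are assumptions made for the example.

```python
def reclassify(values, segments, reclass_type="RANGE", no_value=-9999,
               retain_no_value=True, change_no_value_to=None,
               retain_missing_value=True, change_missing_value_to=None):
    """Reclassify a flat list of cell values.

    segments: list of (start, end, new_value, segment_type) tuples, where
    segment_type is "CLOSEOPEN" for [start, end) and anything else is
    treated as (start, end]. For reclass_type "UNIQUE" only start and
    new_value are used.
    """
    def lookup(v):
        for start, end, new_value, segment_type in segments:
            if reclass_type == "UNIQUE":
                hit = v == start
            elif segment_type == "CLOSEOPEN":
                hit = start <= v < end
            else:
                hit = start < v <= end
            if hit:
                return new_value
        # The cell value is not covered by the mapping table.
        return v if retain_missing_value else change_missing_value_to

    result = []
    for v in values:
        if v == no_value:
            result.append(v if retain_no_value else change_no_value_to)
        else:
            result.append(lookup(v))
    return result

cells = [50, 100, 199, 200, -9999, 750]
table = [(0, 100, 50, "CLOSEOPEN"), (100, 200, 150, "CLOSEOPEN")]
ranged = reclassify(cells, table, retain_no_value=False, change_no_value_to=0)
single = reclassify([100, 7], [(100, None, 1, None)], reclass_type="UNIQUE")
print(ranged, single)
```

With left-closed, right-open intervals the value 200 falls outside both segments, so it keeps its original value because retain_missing_value is True, while the no-value cell is replaced by 0.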

-

change_missing_value_to¶ float – Return the specified value of the grid that is not within the specified interval or single value.

-

change_no_value_to¶ float – Return the specified value of no-value data

-

from_dict(values)¶ Read the reclassification mapping table information from the dict object

Parameters: values (dict) – dict object containing reclassification mapping table information Returns: self Return type: ReclassMappingTable

-

static

from_xml(xml)¶ Import the parameter value stored in the XML format string into the mapping table data, and return a new object.

Parameters: xml (str) – XML format string Returns: Raster reclassification mapping table object Return type: ReclassMappingTable

-

static

from_xml_file(xml_file)¶ Import the mapping table data from the saved XML format mapping table file and return a new object.

Parameters: xml_file (str) – XML file Returns: Raster reclassification mapping table object Return type: ReclassMappingTable

-

is_retain_missing_value¶ bool – Whether the data in the source dataset that is not in the specified interval or outside the single value retain the original value

-

is_retain_no_value¶ bool – Return whether to keep the non-valued data in the source dataset as non-valued.

-

static

make_from_dict(values)¶ Read the reclassification mapping table information from the dict object to construct a new object

Parameters: values (dict) – dict object containing reclassification mapping table information Returns: reclassification mapping table object Return type: ReclassMappingTable

-

reclass_type¶ ReclassType – Return the type of raster reclassification

-

segments¶ list[ReclassSegment] – Return the set of reclassification intervals. Each ReclassSegment is an interval range or the correspondence between an old value and a new value.

-

set_change_missing_value_to(value)¶ Set the value assigned to cells that are not within any specified interval or single value. If is_retain_missing_value is True, this setting has no effect.

Parameters: value (float) – the value assigned to cells that are not within any specified interval or single value Returns: self Return type: ReclassMappingTable

-

set_change_no_value_to(value)¶ Set the value assigned to no-value data. When is_retain_no_value is True, this setting has no effect.

Parameters: value (float) – the value assigned to no-value data Returns: self Return type: ReclassMappingTable

-

set_reclass_type(value)¶ Set the grid reclassification type

Parameters: value (ReclassType or str) – Raster reclassification type, the default value is UNIQUE Returns: self Return type: ReclassMappingTable

-

set_retain_missing_value(value)¶ Set whether data in the source dataset that is not within any specified interval or single value retains its original value.

Parameters: value (bool) – Whether data in the source dataset that is not within any specified interval or single value retains its original value. Returns: self Return type: ReclassMappingTable

-

set_retain_no_value(value)¶ Set whether to keep the no-value data in the source dataset as no-value.

- When set to True, the no-value data in the source dataset is kept as no-value.

- When set to False, the no-value data in the source dataset is set to the value specified by set_change_no_value_to().

Parameters: value (bool) – whether to keep the no-value data in the source dataset as no-value Returns: self Return type: ReclassMappingTable

-

set_segments(value)¶ Set reclassification interval collection

Parameters: value (list[ReclassSegment] or str) – Reclassification interval collection. When the value is a str, use ';' to separate multiple ReclassSegments and, within each ReclassSegment, use ',' to separate the start value, end value, new value and interval type. For example: '0,100,50,CLOSEOPEN; 100,200,150,CLOSEOPEN' Returns: self Return type: ReclassMappingTable
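The string form described above can be illustrated with a small plain-Python parser. This is only a sketch of the documented text format and does not call iobjectspy; the `Segment` tuple and the `parse_segments` helper are hypothetical names introduced for illustration, not part of the library.

```python
from typing import List, NamedTuple


class Segment(NamedTuple):
    """Hypothetical stand-in for ReclassSegment, for illustration only."""
    start: float
    end: float
    new_value: float
    segment_type: str


def parse_segments(text: str) -> List[Segment]:
    """Split 'start,end,new,type; start,end,new,type' into Segment tuples."""
    segments = []
    for part in text.split(';'):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing ';'
        fields = [f.strip() for f in part.split(',')]
        if len(fields) != 4:
            raise ValueError('expected 4 comma-separated fields, got %r' % part)
        start, end, new_value = (float(f) for f in fields[:3])
        segments.append(Segment(start, end, new_value, fields[3]))
    return segments


segments = parse_segments('0,100,50,CLOSEOPEN; 100,200,150,CLOSEOPEN')
for seg in segments:
    print(seg)
```

In practice the string can be passed to set_segments() directly; the parser only makes the field order (start value, end value, new value, interval type) explicit.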

-

to_dict()¶ Output current information to dict

Returns: a dictionary object containing current information Return type: dict

-

to_xml()¶ Output current object information as xml string

Returns: xml string Return type: str

-

to_xml_file(xml_file)¶ This method is used to write the parameter settings of the reclassification mapping table object into an XML file, which is called a raster reclassification mapping table file, and its suffix is .xml. The following is an example of a raster reclassification mapping table file:

The meaning of each label in the reclassification mapping table file is as follows:

- <SmXml:ReclassType></SmXml:ReclassType> tag: reclassification type. 1 means single value reclassification, 2 means range reclassification.

- <SmXml:SegmentCount></SmXml:SegmentCount> tag: reclassification interval collection; the count parameter indicates the number of reclassification levels.

- <SmXml:Range></SmXml:Range> tag: reclassification interval when the reclassification type is range reclassification. The format is: interval start value-interval end value: new value-interval type. For the interval type, 0 means left open and right closed, 1 means left closed and right open.

- <SmXml:Unique></SmXml:Unique> tag: reclassification interval when the reclassification type is single value reclassification. The format is: original value: new value.

- <SmXml:RetainMissingValue></SmXml:RetainMissingValue> tag: whether to retain the original value of unclassified cells. 0 means not retained, 1 means retained.

- <SmXml:RetainNoValue></SmXml:RetainNoValue> tag: whether the no-value data remains no-value. 0 means not kept, 1 means kept.

- <SmXml:ChangeMissingValueTo></SmXml:ChangeMissingValueTo> tag: the value assigned to unclassified cells.

- <SmXml:ChangeNoValueTo></SmXml:ChangeNoValueTo> tag: the value assigned to no-value data.

Parameters: xml_file (str) – xml file path Returns: Return True if export is successful, otherwise False Return type: bool

-

iobjectspy.analyst.reclass_grid(input_data, re_pixel_format, segments=None, reclass_type='UNIQUE', is_retain_no_value=True, change_no_value_to=None, is_retain_missing_value=False, change_missing_value_to=None, reclass_map=None, out_data=None, out_dataset_name=None, progress=None)¶ Raster data is reclassified and the result raster dataset is returned. Raster reclassification is to reclassify the raster values of the source raster data and assign values according to new classification standards. The result is to replace the original raster values of the raster data with new values. For known raster data, sometimes it is necessary to reclassify it in order to make it easier to see the trend, find the rule of raster value, or to facilitate further analysis:

- Through reclassification, new values can replace the old values of cells in order to update the data. For example, when dealing with changes in land types, assign new grid values to wasteland that has been converted to cultivated land.

- Through reclassification, a large number of grid values can be grouped and classified, and cells in the same group are given the same value to simplify the data. For example, categorize dry land, irrigated land, and paddy fields as agricultural land.

- Through reclassification, a variety of raster data can be classified according to uniform standards. For example, if the factors affecting the location of a building include soil and slope, the input raster data of soil type and slope can be reclassified according to a grading standard of 1 to 10, which is convenient for further location analysis.

- Through reclassification, you can set cells that you do not want to participate in the analysis to no-value, or you can assign newly measured values to cells that were originally no-value, to facilitate further analysis and processing.

For example, it is often necessary to perform slope analysis on a raster surface to obtain slope data that assists terrain-related analysis. However, we may need to know which grade a slope belongs to rather than its specific value, in order to understand the steepness of the terrain and support further analysis such as site selection and road paving. In that case, reclassification can be used to divide the different slopes into corresponding classes.

Parameters: - input_data (DatasetImage or DatasetGrid or str) – The specified dataset to be reclassified. Image datasets are supported, including multi-band images

- re_pixel_format (ReclassPixelFormat) – The storage type of the raster value of the result dataset

- segments (list[ReclassSegment] or str) – Reclassification interval collection. When segments is a str, use ';' to separate multiple ReclassSegments and, within each ReclassSegment, use ',' to separate the start value, end value, new value and interval type. For example: '0,100,50,CLOSEOPEN; 100,200,150,CLOSEOPEN'

- reclass_type (ReclassType or str) – raster reclass type

- is_retain_no_value (bool) – Whether to keep the no-value data in the source dataset as no-value

- change_no_value_to (float) – The specified value of no value data. When is_retain_no_value is set to False, the setting is valid, otherwise it is invalid.

- is_retain_missing_value (bool) – Whether cells in the source dataset that fall outside every specified interval or single value retain their original value

- change_missing_value_to (float) – The specified value of the grid that is not within the specified interval or single value. When is_retain_no_value is set to False, the setting is valid, otherwise it is invalid.

- reclass_map (ReclassMappingTable) – Raster reclassification mapping table class. If the object is not empty, use the value set by the object to reclassify the grid.

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource where the result dataset is located

- out_dataset_name (str) – result dataset name

- progress (function) – progress information processing function, please refer to StepEvent

Returns: result dataset or dataset name

Return type: DatasetGrid or DatasetImage or str
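The interaction of the no-value and missing-value options can be sketched in plain Python on a small list-of-lists grid. This is an illustration of the semantics described above, not the library's implementation and not a call into iobjectspy. The `NO_VALUE` marker, the `reclass_cell` helper, the left-closed/right-open interval handling, and the fallback to no-value when an unmatched cell has no replacement are all assumptions made for this sketch.

```python
NO_VALUE = -9999.0  # hypothetical no-value marker used only in this sketch


def reclass_cell(value, segments, retain_no_value=True, change_no_value_to=None,
                 retain_missing_value=False, change_missing_value_to=None):
    """Reclassify one cell with (start, end, new_value) ranges, start <= v < end."""
    if value == NO_VALUE:
        if retain_no_value or change_no_value_to is None:
            return NO_VALUE
        return change_no_value_to
    for start, end, new_value in segments:
        if start <= value < end:
            return new_value
    # The cell is not covered by any segment ("missing" value).
    if retain_missing_value:
        return value
    if change_missing_value_to is not None:
        return change_missing_value_to
    return NO_VALUE  # assumption: unmatched cells without a replacement become no-value


# Grade slope values (degrees) into three classes; 95.0 matches no segment.
slope = [[2.0, 12.0, 40.0],
         [NO_VALUE, 7.5, 95.0]]
segments = [(0, 5, 1), (5, 15, 2), (15, 90, 3)]
graded = [[reclass_cell(v, segments, change_missing_value_to=0) for v in row]
          for row in slope]
print(graded)
```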

-

iobjectspy.analyst.aggregate_grid(input_data, scale, aggregation_type, is_expanded, is_ignore_no_value, out_data=None, out_dataset_name=None, progress=None)¶ Aggregate raster data and return the result raster dataset. Raster aggregation reduces the raster resolution by an integer factor to generate a new raster with a coarser resolution. Each cell of the result is aggregated from a group of cells in the original raster, and its value is determined from the values of those cells: it can be their sum, maximum, minimum, average, or median. If the resolution is reduced by a factor of n (n is an integer greater than 1), the number of rows and columns of the aggregated grid is 1/n of the original grid, that is, the cell size is n times the original. By generalizing the data, aggregation can eliminate unnecessary information or remove minor errors.

Note: If the number of rows and columns of the original raster data is not an integer multiple of scale, use the is_expanded parameter to handle the remainder.

- If is_expanded is True, the remainder is padded out to a full integer multiple. The padded cells are all no-value, so the extent of the result dataset will be larger than the original.

- If is_expanded is False, the remainder is discarded, so the extent of the result dataset will be smaller than the original.

Parameters: - input_data (DatasetGrid or str) – The specified raster dataset for aggregation operation.

- scale (int) – The ratio of the specified grid size between the result grid and the input grid. The value is an integer value greater than 1.

- aggregation_type (AggregationType) – aggregation operation type

- is_expanded (bool) – Specify whether to deal with fractions. When the number of rows and columns of the original raster data is not an integer multiple of the scale, fractions will appear at the grid boundary.

- is_ignore_no_value (bool) – The calculation method of the aggregation operation when there is no value data in the aggregation range. If it is True, use the rest of the grid values in the aggregation range except no value for calculation; if it is False, the aggregation result is no value.

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource where the result dataset is located

- out_dataset_name (str) – result dataset name

- progress (function) – progress information processing function, please refer to StepEvent

Returns: result dataset or dataset name

Return type: DatasetGrid or str
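The effect of is_expanded and is_ignore_no_value can be sketched in plain Python on a list-of-lists grid. This is an illustration of the behaviour described above under stated assumptions, not the library's implementation: the `aggregate` helper, the use of `None` as the no-value marker, and the choice to return no-value for a block that contains no valid cells are hypothetical.

```python
import math

NO_VALUE = None  # hypothetical no-value marker used only in this sketch


def aggregate(grid, scale, reducer, is_expanded, is_ignore_no_value):
    """Aggregate scale x scale blocks of `grid` with `reducer` (e.g. sum, max)."""
    rows, cols = len(grid), len(grid[0])
    # Pad the remainder (ceil) or discard it (floor).
    round_fn = math.ceil if is_expanded else math.floor
    out_rows, out_cols = round_fn(rows / scale), round_fn(cols / scale)
    result = []
    for r in range(out_rows):
        out_row = []
        for c in range(out_cols):
            block = []
            for i in range(r * scale, (r + 1) * scale):
                for j in range(c * scale, (c + 1) * scale):
                    inside = i < rows and j < cols
                    # Cells in the padded area count as no-value.
                    block.append(grid[i][j] if inside else NO_VALUE)
            valid = [v for v in block if v is not NO_VALUE]
            if not valid or (len(valid) < len(block) and not is_ignore_no_value):
                out_row.append(NO_VALUE)
            else:
                out_row.append(reducer(valid))
        result.append(out_row)
    return result


grid = [[1, 2, 3],
        [4, 5, 6],
        [7, 8, 9]]
cropped = aggregate(grid, 2, sum, is_expanded=False, is_ignore_no_value=True)
padded = aggregate(grid, 2, sum, is_expanded=True, is_ignore_no_value=True)
strict = aggregate(grid, 2, sum, is_expanded=True, is_ignore_no_value=False)
print(cropped, padded, strict)
```

With a 3 x 3 input and a scale of 2, discarding the remainder yields a single cell, while padding yields a 2 x 2 result whose edge cells are computed from fewer valid inputs or, when no-value is not ignored, become no-value.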

-

iobjectspy.analyst.slice_grid(input_data, number_zones, base_output_zones, out_data=None, out_dataset_name=None, progress=None)¶ Reclassify a raster using natural breaks. This approach is suitable for unevenly distributed data.

Jenks natural breaks method:

The reclassification uses the Jenks natural breaks method, which is based on the natural groupings inherent in the data. It is a form of variance-minimization classification. The class breaks are usually unevenly spaced and are placed where the values change sharply, so the method groups similar values together and maximizes the differences between classes. Because the Jenks method places similar (clustered) values in the same class, it is suitable for unevenly distributed data values.

Parameters: - input_data (DatasetGrid or str) – The specified raster dataset to be reclassified.

- number_zones (int) – The number of zones to reclassify the raster dataset.

- base_output_zones (int) – The value of the lowest zone in the result raster dataset

- out_data (Datasource or DatasourceConnectionInfo or str) – The datasource where the result dataset is located

- out_dataset_name (str) – result dataset name

- progress (function) – progress information processing function, please refer to StepEvent

Returns: result dataset or dataset name

Return type: DatasetGrid or str

Set the number of zones to 9 so that the raster values, from minimum to maximum, are divided into 9 natural classes. The lowest zone value is set to 1, and each subsequent zone value increases by 1 from that starting value.

>>> slice_grid('E:/data.udb/DEM', 9, 1,'E:/Slice_out.udb')
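How number_zones and base_output_zones shape the output values can be sketched in plain Python. The sketch assumes the break values are already known; the break values below, the `assign_zones` helper, and the treatment of a value lying exactly on a break (it goes to the upper zone, except the maximum) are assumptions made for illustration and do not come from the library.

```python
from bisect import bisect_right


def assign_zones(values, breaks, base_output_zones):
    """Map each value to a zone number.

    `breaks` is [min, b1, ..., max]; zone i covers [breaks[i], breaks[i + 1]).
    The lowest zone gets `base_output_zones`, and each next zone adds 1.
    """
    inner = breaks[1:-1]  # only interior breaks separate zones
    return [base_output_zones + bisect_right(inner, v) for v in values]


# Hypothetical break values for 3 zones, starting the numbering at 1.
breaks = [0, 10, 50, 100]
zones = assign_zones([0, 9.9, 10, 49, 50, 100], breaks, base_output_zones=1)
print(zones)
```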

-

iobjectspy.analyst.compute_range_raster(input_data, count, progress=None)¶ Calculate the natural break values of the raster cell values

Parameters: - input_data (DatasetGrid or str) – raster dataset

- count (int) – the number of natural segments

- progress (function) – progress information processing function, please refer to StepEvent

Returns: The break value of the natural segmentation (including the maximum and minimum values of the pixel)

Return type: Array

-

iobjectspy.analyst.compute_range_vector(input_data, value_field, count, progress=None)¶ Calculate the natural break values of a vector dataset field

Parameters: - input_data (DatasetVector or str) – vector dataset

- value_field (str) – the field whose values are used for the segmentation

- count (int) – the number of natural segments

- progress (function) – progress information processing function, please refer to StepEvent

Returns: The break value of the natural segment (including the maximum and minimum values of the attribute)

Return type: Array
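The idea behind natural break values can be sketched with an exact dynamic-programming partition that minimises the within-class sum of squared deviations. This is a plain-Python illustration of the general technique, not the algorithm iobjectspy uses internally; the `natural_breaks` helper and the convention of reporting each class's lowest value as its break are assumptions of this sketch.

```python
def natural_breaks(values, count):
    """Return count + 1 break values: the minimum, the lowest value of each
    class after the first, and the maximum."""
    data = sorted(values)
    n = len(data)
    if not 1 <= count <= n:
        raise ValueError('count must be between 1 and the number of values')

    # Prefix sums give the squared deviation of data[i:j] in constant time.
    prefix = [0.0] * (n + 1)
    prefix_sq = [0.0] * (n + 1)
    for i, v in enumerate(data):
        prefix[i + 1] = prefix[i] + v
        prefix_sq[i + 1] = prefix_sq[i] + v * v

    def ssd(i, j):
        total = prefix[j] - prefix[i]
        return (prefix_sq[j] - prefix_sq[i]) - total * total / (j - i)

    inf = float('inf')
    # cost[k][j]: lowest cost of splitting data[:j] into k classes.
    cost = [[inf] * (n + 1) for _ in range(count + 1)]
    split = [[0] * (n + 1) for _ in range(count + 1)]
    cost[0][0] = 0.0
    for k in range(1, count + 1):
        for j in range(k, n + 1):
            for i in range(k - 1, j):
                candidate = cost[k - 1][i] + ssd(i, j)
                if candidate < cost[k][j]:
                    cost[k][j] = candidate
                    split[k][j] = i  # the last class is data[i:j]

    # Walk back through the split table to recover each class's start index.
    breaks = [data[-1]]
    j = n
    for k in range(count, 1, -1):
        j = split[k][j]
        breaks.append(data[j])
    breaks.append(data[0])
    return breaks[::-1]


breaks = natural_breaks([1, 2, 3, 10, 11, 12, 50, 51, 52], 3)
print(breaks)
```

The triple loop is cubic in the number of values for a fixed class count, so this sketch is only practical for small samples.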

-

class

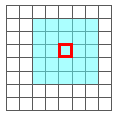

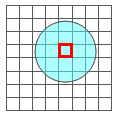

iobjectspy.analyst.NeighbourShape¶ Bases:

object

Neighbourhood shape base class. According to their shape, neighbourhoods are divided into rectangular, circular, annular (ring-shaped) and wedge-shaped (fan-shaped) neighbourhoods. This class carries the parameter settings related to the neighbourhood shape.

-

shape_type¶ NeighbourShapeType – Neighbourhood shape type for neighbourhood analysis

-

-

class

iobjectspy.analyst.NeighbourShapeRectangle(width, height)¶ Bases:

iobjectspy._jsuperpy.analyst.sa.NeighbourShape

Rectangular neighborhood shape class

Construct a rectangular neighborhood shape class object

Parameters: - width (float) – the width of the rectangular neighborhood

- height (float) – the height of the rectangular neighborhood

-

height¶ float – height of rectangular neighborhood

-

set_height(value)¶ Set the height of the rectangular neighborhood

Parameters: value (float) – the height of the rectangular neighborhood Returns: self Return type: NeighbourShapeRectangle

-

set_width(value)¶ Set the width of the rectangular neighborhood

Parameters: value (float) – the width of the rectangular neighborhood Returns: self Return type: NeighbourShapeRectangle

-

width¶ float – the width of the rectangular neighborhood

-

class

iobjectspy.analyst.NeighbourShapeCircle(radius)¶ Bases:

iobjectspy._jsuperpy.analyst.sa.NeighbourShape

Circular neighborhood shape class

Construct a circular neighborhood shape class object

Parameters: radius (float) – the radius of the circular neighborhood

-

radius¶ float – radius of circular neighborhood

-

set_radius(value)¶ Set the radius of the circular neighborhood

Parameters: value (float) – the radius of the circular neighborhood Returns: self Return type: NeighbourShapeCircle

-

-

class

iobjectspy.analyst.NeighbourShapeAnnulus(inner_radius, outer_radius)¶ Bases:

iobjectspy._jsuperpy.analyst.sa.NeighbourShape

Annular (ring-shaped) neighborhood shape class

Construct an annular neighborhood shape object

Parameters: - inner_radius (float) – inner ring radius

- outer_radius (float) – outer ring radius
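To make the role of the two radii concrete, the following plain-Python sketch lists the cell offsets that an annular neighbourhood would cover around a centre cell. It does not use iobjectspy. Measuring the radii in cell units between cell centres, and treating both bounds as inclusive, are assumptions made for illustration; the `annulus_offsets` helper is hypothetical.

```python
import math


def annulus_offsets(inner_radius, outer_radius):
    """Cell offsets (dx, dy) whose centre distance d satisfies
    inner_radius <= d <= outer_radius."""
    reach = int(math.floor(outer_radius))
    offsets = []
    for dy in range(-reach, reach + 1):
        for dx in range(-reach, reach + 1):
            if inner_radius <= math.hypot(dx, dy) <= outer_radius:
                offsets.append((dx, dy))
    return offsets


ring = annulus_offsets(1, 1.5)
print(len(ring), ring)
```

An inner radius of 0 reduces the ring to a full disc, which corresponds to the circular neighbourhood under the same assumptions.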

-

inner_radius¶ float – inner ring radius

-

outer_radius¶ float – outer ring radius

-

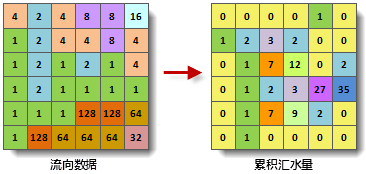

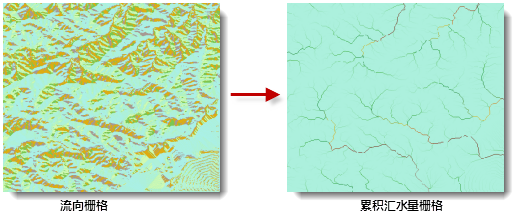

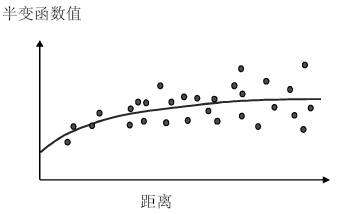

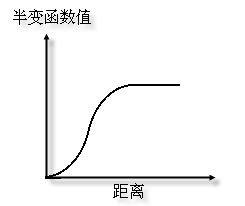

set_inner_radius(value)¶ Set inner ring radius